Cognitive Support for Systems Engineering

Jim Brander

Interactive Engineering Pty Ltd

Sydney Australia

Abstract

A system for reading system specifications and converting them into an active structure is

described, as well as the extensions to Constraint Reasoning to allow its use on a complex

dynamic construct. The resulting structure is intended to retain the entire meaning of the

specification. Some of the problems that needed to be overcome are mentioned, as well as

the benefits of using the same structure and methodology throughout the process. The

possible effects on the Systems Engineering process are noted.

Introduction

Specifications can be complex, and people are limited in the complexity they can handle

– they can handle no more than six to nine pieces of information in play at once.

When a person encounters a new specification, this limit is overwhelmed and many mistakes

are made. There would appear to be a need for a system to support requirements analysis by

automatically extracting semantics from specifications. We have used a small aerospace

specification as a test bed, the representation being active in reading the document and

extending itself. A dialogue between characters muffles the sound of shibboleths

crumbling.

A Story

Simplicio: (rushing into HR store) This new

specification is a real problem. It has ten thousand things to think about. What have you

got for me?

Septimus: Our best model does nine.

Simplicio: Nine thousand – that might do at a pinch.

Septimus: No, nine. It can only handle nine things at once. It will just assume nothing

else changes while it works.

Simplicio: But that’s no good. We know lots of things will be changing.

Septimus: It also points out “I am only human” at regular intervals. You can

claim ceteris paribus – that excuse has worked for economists for decades.

Simplicio: Yes, I can see how well that works. Everything works until it doesn’t, and

then it crashes and burns. We need to do better.

Septimus: Well, there is our Sixpack model.

Simplicio: If nine is near useless, wouldn’t six be worse?

Septimus: Unlike our Nine model, Sixpack is a combination of a

person and a machine. The person handles the more subtle aspects, the machine monitors

everything that can change and controls the interaction with the person to prevent

overload, so six pieces of information in play from the person is enough.

Simplicio: What’s the catch? Is it more expensive?

Septimus: No, quite a lot cheaper, and without the tantrums. It does take more time to get

started, because Sixpack needs to know all about the specification, whereas a Nine will

already be making decisions after learning about 0.1% of your problem. At that point, it

has already gone into overload, with ten things to worry about.

Simplicio: How does the machine know what can change?

Septimus: It reads the specification.

Simplicio: But I thought machines couldn’t read text.

Septimus: In general they can’t, but specification text is unusual. The language is

relatively formal and “dumbed down” (see Appendix),

it doesn’t break off mid sentence and start talking about what was on TV last night,

the domain is well-bounded, there should have been an effort on the writer’s part to

minimise ambiguity, and both the person and the machine learn together, with the person

helping the machine read the specification, and vice versa. Is there sufficient value in

the specification to make that worthwhile?

Simplicio: The specification covers a billion dollar job, and there were fearful blunders

the last time we attempted it, so there won’t be any worries about a bit of a delay

– what are you talking – days, months, years?

Septimus: It might take a few weeks to put in enough knowledge for a big specification

– what typically happens then is the specification goes back to the drawing board to

fix all the mistakes Sixpack has identified – mistakes that would otherwise have

gotten out into the project. Then everyone can see much more clearly what is involved. And

when I say “see”, I mean see, because the machine can show a graphical

representation of everything in the specification – all the relations and their

interactions. We were talking about a maximum of nine things before. Most engineers are

weak when it comes to words, whereas if you can use their visual channel to show them the

interdependencies, they are very strong.

Simplicio: What happens with changes? If there is a visual representation, won’t it

become out of date, like all those CPM diagrams I see pinned up, where nobody can be

bothered going back and changing the model?

Septimus: The machine reads the new text and changes its internal structure – the

visual representation is just a display of that internal structure – maybe half an hour,

depending on the size. That’s the point of reading text – it is far faster than

any other approach to changing structure.

Simplicio: Why didn’t you mention Sixpack first?

Septimus: Our Nines have their pride – they would rather do things using their native

ability - they still haven’t come to terms with cognitive assistance from a machine,

probably never will. We look down on Sixpack, call the person

Joe behind their back. We only use Sixpack where there is no other choice.

Simplicio: We have to certify that the system is safe to operate. Having

ten thousand things able to change and a control unit limited, at best, to nine things

changing at once sounds decidedly unsafe. How did we get away with it for so long?

Septimus: There seemed no alternative, at least until we got this new model.

Simplicio: Wait until I tell people we can do the job properly at last.

Septimus: Can I mention that Sixpack comes from our project management offerings –

people don’t get upset about a machine doing that sort of boring work.

Simplicio: Oh. So I shouldn’t say anything?

An Analogy

In attempting to improve the outcomes

of projects, it became clear in the 1960s that people could not manage more than about

fifty activities without some tool to assist them – they would become confused at the

interplay of the different activities as the durations changed. A tool – Critical

Path Method – was developed to assist in planning of projects. It provides a time

model of the activity interactions, and projects with thousands of activities are

regularly planned with it. It is fairly crude, as it does not allow for the free interplay

of time and cost and risk in its algorithm, but it was a considerable improvement on

unassisted human planning. The analogy with building a model for specifications is not

perfect – activities can be treated as largely independent (they may share resources,

but if they are too strongly interdependent, the model breaks down), while the objects of

a specification will usually be strongly interdependent. Concentration on just one aspect

of the relations among the objects – say one of propositional, existential or

temporal logic, or the hierarchy of objects, would seem to be a mistake, as it is the

holistic nature of the specification that needs to be modelled, there being no equivalent

in a specification of the simple and powerful metric of the early finish date of a

project. The analogy of a model used as a tool to understand the real situation, where the

model is used in more than one direction, would seem pertinent.

Another example of a problem

overwhelming human ability is flying a helicopter. A helicopter pilot can be very busy, to

the point now where the most hazardous manoeuvres are undertaken on autopilot. We are not

suggesting an algorithm of this kind – it takes too long to write, it is narrowly

focused, relies on some very strong assumptions (which need only remain valid for a few

minutes) and is moving the upper limit to no more than nine (a person can still make the

manoeuvre, just not with high reliability). But the same problem arises with

specifications – if a person is overloaded in preparing or examining a specification,

because there are too many things in play at once, the result can be far more costly or

deadly than a helicopter crash, while causation is much harder to track down.

Systems Engineering

Before some readers scream

“Artificial Intelligence!” and start rushing for the exits, we will try to

convince them that this is the domain of Systems Engineering. We will require a massively

complex and intricate system, with thousands of pieces of language machinery all working

smoothly in unison, encountering situations that cannot be predicted, and needing to

modify and extend itself as it deals with those situations. As the dialogue above outlined,

the system should not just emulate the abilities of people, but have a quite different set

of abilities. This sounds like Systems Engineering, or at least the engineering of a

complex system. We understand that practitioners of SE may not wish to deal with a

system that is vague, unpredictable, with very narrow focus and severely limited in its

capabilities – people, in other words. We promise the system we describe is

deterministic (that is, predictable in its behaviour), although other aspects, such as

self-extensibility, may be unfamiliar.

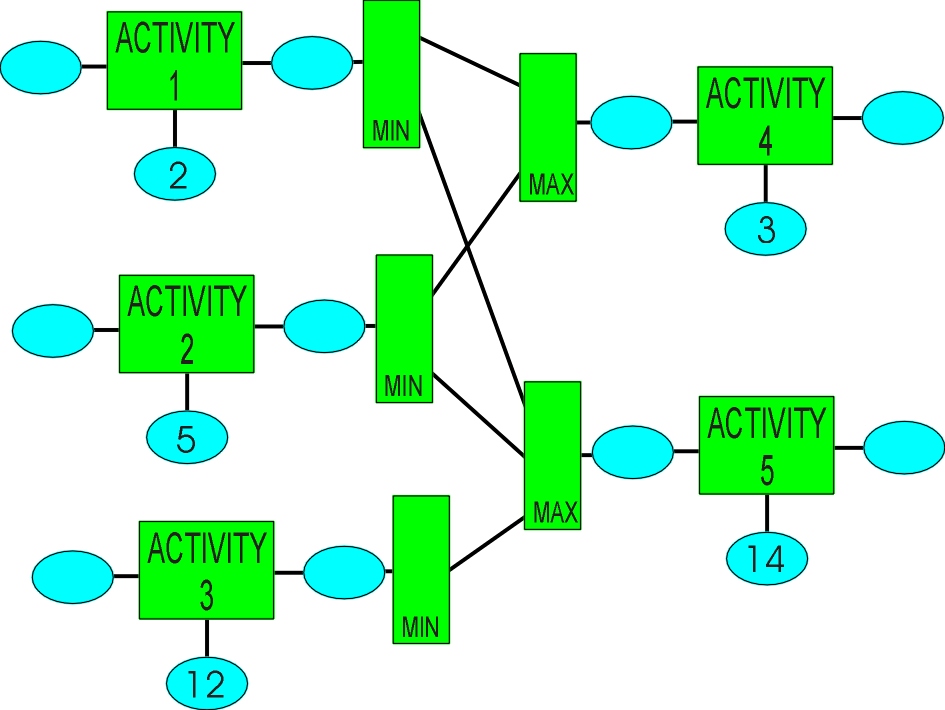

Figure 1 - CPM Structure

It would be useful to support numeric ranges on the durations and dates, so we change all operators to work with ranges. With ranges, we don’t need two passes to find early and late dates, we can hold information about forbidden periods between those dates, and small changes not on the critical path stay local.
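As a rough illustration of operators that work on ranges, the Python sketch below holds a start, a duration and a finish as closed ranges and lets one plus relation narrow them in both directions. The `Range` class, the day units and the numbers are assumptions made for the example, not the operators of the system described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed numeric range [lo, hi]; the unit (days) is an assumption."""
    lo: float
    hi: float

    def __add__(self, other: "Range") -> "Range":
        return Range(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other: "Range") -> "Range":
        return Range(self.lo - other.hi, self.hi - other.lo)

    def intersect(self, other: "Range") -> "Range":
        lo, hi = max(self.lo, other.lo), min(self.hi, other.hi)
        if lo > hi:
            raise ValueError("inconsistent: the ranges do not overlap")
        return Range(lo, hi)


# One activity: start + duration = finish, every value held as a range.
start = Range(0, 10)
duration = Range(3, 5)
deadline = Range(0, 9)  # the finish must fall inside this window

# Reading the relation left to right narrows the finish.
finish = (start + duration).intersect(deadline)
# Reading the same relation right to left narrows the start.
start = start.intersect(finish - duration)

print(start, finish)
```

With these numbers the finish narrows to 3..9 and the start to 0..6, so the early and late bounds travel together through the one relation.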

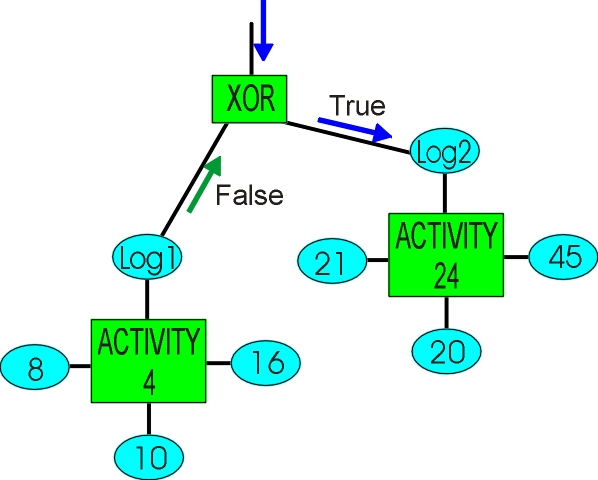

We might have alternative ways of doing things, and we can’t choose between them until we get into the project. If we add a logical connection to the alternative activity, and make it an operator like plus or multiply, we can have the activity sit in a quiescent state. If it finds there is insufficient time to fit its duration, it signals false and another activity is forced true through the XOR, shown in Figure 2.

Figure 2 - Alternative Activities
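The behaviour of the XOR can be sketched as an exactly-one constraint over the alternatives. In the Python below, `None` stands for the quiescent state, and the activity names, durations and window are hypothetical; it is an illustration under those assumptions, not the operator used in the system.

```python
from typing import Dict, Optional


def propagate_xor(states: Dict[str, Optional[bool]]) -> Dict[str, Optional[bool]]:
    """Apply an exactly-one constraint; None means quiescent (undecided)."""
    result = dict(states)
    true_names = [n for n, s in result.items() if s is True]
    open_names = [n for n, s in result.items() if s is None]
    if len(true_names) > 1:
        raise ValueError("more than one alternative is true")
    if len(true_names) == 1:
        for name in open_names:      # one is chosen, so the rest are false
            result[name] = False
    elif len(open_names) == 1:       # all others false: force the last one
        result[open_names[0]] = True
    elif not open_names:
        raise ValueError("no alternative can be true")
    return result


# Hypothetical alternatives competing for a 10-day window.
window = 10.0
durations = {"weld": 12.0, "bolt": 8.0}
states: Dict[str, Optional[bool]] = {"weld": None, "bolt": None}

# The first alternative finds its duration does not fit and signals false.
if durations["weld"] > window:
    states["weld"] = False

states = propagate_xor(states)
print(states)
```

Here the first alternative cannot fit, so the constraint forces the second one true; in a fuller model the forced activity would then check its own fit in the same way.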

A Good Application

If one were to look for a first

application of Natural Language Processing (NLP), one would presumably look for:

- Text that has some identifiable and unifying purpose

- A reasonably bounded domain of knowledge

- A small to medium size document with a high value

- Formal, clean and relatively unambiguous text

- A simple basic form

- An application where slow reading time (about that of an

attentive human) would not be a problem

- A long life for the structure that is produced by reading

- Some human assistance to the machine while it is reading the text

would be acceptable

It is essential that the readers of a

specification have sufficient knowledge to understand it. Putting this knowledge in a

machine is a good way of exploring what knowledge is necessary as a prerequisite. Of

course, the specification provides new knowledge, and it will be assumed that the machine

can acquire this knowledge from the specification just as well as a human reader.

Function and Performance

Specifications (FPS) range from a few pages to a few hundred pages – within the range

of machine memory, a few gigabytes. The language is typically clipped and clean. The FPS may be deliberately vague

in how the specification is to be implemented, but should be unambiguous in its

description of what a successful implementation must provide.

The basic statement in an FPS follows

the simple form:

Reading time may range from a few

minutes for a small specification to over an hour for a large one. The project for which

the specification serves as a foundation might run anywhere from months to decades, so

hours spent in machine reading the specification and validating its structure should not

be an embarrassment. The reading system can be used through the requirements elicitation

phase, so the prerequisite knowledge will already be available for the

specification’s first draft.

Somewhere to Go

NLP sounds worthwhile, until one asks

what is the result of the processing. A specification describes

relations – those relations may be between logical states, existential states, time,

or objects or relations. It seems that all those relations should be represented in some

way. Herein lies a problem. Most people are familiar with

propositional logic – A implies B. Some readers may have heard of predicate logic,

where logic links objects. This sounds as though it would be suitable, until one is told

that predicates may not be parameters of other predicates. That is, a statement such as

“The equipment shall allow

trainees to perform diagnostic tests.”

cannot be

represented in predicate logic, as “perform” is itself a predicate (as are

“diagnostic” and “test”). Higher order logic, which does allow

predicates as parameters of other predicates, is both “not well-behaved”

[Wikipedia-higher order logic] (a polite mathematical term for unusable) and opaque. A

paper by Cant describes an approach to program verification using one form of higher order

logic. We see little merit in a person who may be unfamiliar with the subject matter of a

specification turning it into something opaque to almost all users, while crushing the

logic in the specification to satisfy the demands of a limited logical language. Instead,

we should be seeking to turn it into an internal representation – a model - which is

at least as powerful as natural language. There has been considerable work on

representation of knowledge – much of this has been an attempt to turn text into

diagrams for humans to peruse, such as the work of Sowa - rather than for a machine to use

for its own purposes. A machine reading text needs a structure in which states and objects

can propagate – an active structure – rather than a diagram. A diagram is

necessarily a simplification – there may be thousands of connections on one object,

and millions of connections overall, which would overwhelm us. We want the machine to

assist us in dealing with the full complexity of the problem, not with small aspects of

it, which we can manage quite well on our own.

How Hard Can It Be

We know the upper bound on the

complexity of reading specifications – the same upper bound that we are trying to get

around with the provision of NLP on an FPS - no more than six to nine pieces of

information in play at once. This suggests it may not be as hard as we would like to

think. Some of the problem areas:

Packed Noun Phrases

A specification will typically include

noun phrases up to eight words in length. The noun phrase needs to be picked apart and

built into a structure which honours the relations involved. An adjective may relate to

the word immediately to its right, or to the word at the end of the phrase. Some

transformative relations cause a new object to be created, and this new object becomes the

target of relations on the left. An example is “virtual vehicle”. A vehicle is a

physical object with mass and extension – a “virtual vehicle” exists in a

computer – it may be an image or a database cipher, with no mass. The combination of

the words creates a new object, to which other words in the same noun phrase refer. In the

worst case, choosing the appropriate subject and relation for each word becomes a

dynamically constructed constraint reasoning problem.

Prepositional Chains

Prepositional chains (we include

participials and other “stuff” caught up in the chain) carry a large amount of

the semantic content of a specification. A well written specification may have a chain

limit of four or six, while a heavily amended contract may have

twenty. While the chain is short – one or two prepositions – it is relatively

easy to unravel. As the chain lengthens, it becomes increasingly difficult to be certain

as to which object to its left the preposition refers, until it becomes a hypothesising

“best fit” problem using relation modelling and chain decomposition rules.
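To give a feel for why longer chains turn into a hypothesising problem, the sketch below enumerates every non-crossing way of attaching a chain of prepositional phrases to the objects on their left, then picks a best fit with a toy score. The phrase, the plausibility table and the scoring rule are all hypothetical; the relation modelling and decomposition rules of the actual system are not shown in this paper.

```python
from typing import Iterator, List, Tuple


def attachments(n_preps: int) -> Iterator[Tuple[int, ...]]:
    """Yield each non-crossing attachment for n_preps prepositional phrases.

    Object 0 is the head; object i is the noun inside the i-th phrase.
    result[i - 1] is the index of the object that phrase i attaches to.
    """
    def extend(frontier: List[int], chosen: List[int], i: int):
        if i > n_preps:
            yield tuple(chosen)
            return
        for depth, target in enumerate(frontier):
            # Attaching to `target` closes off every object deeper than it.
            yield from extend(frontier[:depth + 1] + [i], chosen + [target], i + 1)

    yield from extend([0], [], 1)


# Hypothetical chain: "restrictions on performance of (the) vehicle in (the) simulator"
objects = ["restrictions", "performance", "vehicle", "simulator"]
preps = ["on", "of", "in"]

# Hypothetical plausibility of (left object, preposition); unlisted pairs score 0.
plausible = {
    ("restrictions", "on"): 2,
    ("performance", "of"): 2,
    ("performance", "in"): 2,
    ("vehicle", "in"): 1,
}


def score(attachment: Tuple[int, ...]) -> int:
    return sum(plausible.get((objects[t], preps[i]), 0)
               for i, t in enumerate(attachment))


best = max(attachments(len(preps)), key=score)
print(len(list(attachments(4))), best)
```

Under the non-crossing assumption a chain of four already has 14 candidate readings, and the count grows quickly from there, which is why a scoring step over hypotheses is needed once analysis alone cannot decide.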

Self Reference

The specification may refer to itself,

or to other documents. A document structure needs to be built at the same time as the

document is being read, so document references can be resolved. The internal reference may

be vague – “below” – or seemingly precise (in clause 1.2.4, a

reference to “the restrictions on performance in 3.2.7.a”) – the actual

meaning can’t be known when the reference is encountered, so fix-up may be required,

and the restrictions may have moved

to 3.2.9.c, so the system must validate every reference. There are layers of fix-up jobs

– some being scheduled at the end of the sentence, such as anaphora in the sentence,

some at the end of the section, or at the end of reading. This is no simple scheduling

task – a subtle modelling resolution may mean bringing forward anaphora resolution,

and that may require bringing forward a modelling resolution elsewhere. Having something

as a base that started out as a scheduling tool is no bad thing.
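The simplest layer of fix-up, resolving clause references at the end of reading, might look like the sketch below. The `DocumentReader` class and its methods are hypothetical names for illustration, and the layered rescheduling described above (bringing forward anaphora or modelling resolution) is deliberately left out.

```python
from typing import Dict, List, Sequence, Tuple


class DocumentReader:
    """Builds a clause index while reading and queues references for fix-up."""

    def __init__(self) -> None:
        self.clauses: Dict[str, str] = {}         # clause number -> text
        self.pending: List[Tuple[str, str]] = []  # (citing clause, cited clause)

    def read_clause(self, number: str, text: str, cites: Sequence[str] = ()) -> None:
        self.clauses[number] = text
        # The cited clause may not have been read yet, so defer the check.
        for target in cites:
            self.pending.append((number, target))

    def run_fixups(self) -> List[Tuple[str, str]]:
        """Validate every queued reference; return those that do not resolve."""
        broken = [(src, dst) for src, dst in self.pending
                  if dst not in self.clauses]
        self.pending.clear()
        return broken


reader = DocumentReader()
reader.read_clause("1.2.4", "... subject to the restrictions on performance in 3.2.7.a",
                   cites=["3.2.7.a"])
# The restrictions were moved during an amendment and now sit in 3.2.9.c.
reader.read_clause("3.2.9.c", "Restrictions on performance ...")
broken = reader.run_fixups()
print(broken)
```

The stale reference from 1.2.4 is reported rather than silently accepted, which is the validation step the text calls for.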

Defined Terms

Even a small specification will define

terms. There are various methods – an acronym in brackets, a quoted and initial

capital form in brackets, use as a heading, a fully capitalised form, sometimes a formal

textual definition, where the creation of the relation (“is defined as”,

“shall mean”) also creates the dictionary entry. Sometimes the defined term is

one word and left

uncapitalised, meaning it should overlay the word in

the global dictionary. The defined term may be used before it is defined, and it can be

difficult to tell whether the defined term is meant. Sometimes the term is defined and

then used a few words later in the same sentence. The use of the defined term may be

bounded to a section, to prevent collision with the same term used with a different

meaning in other parts of the document (another reason for building the document

structure). The likelihood of encountering internally defined terms forces the use of

dynamic methods – a local dictionary, rescanning, fix-up.
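One plausible way to layer a local dictionary over the global one is Python's `collections.ChainMap`, which searches its mappings in order. The dictionary entries below are hypothetical (apart from the FPS expansion), and the sketch covers only lookup, not rescanning or fix-up.

```python
from collections import ChainMap

# Hypothetical global dictionary entries.
global_dictionary = {
    "flight": "movement through the air",
    "trainee": "a person being trained",
}
# Terms defined by the document as a whole.
document_terms = {"FPS": "Function and Performance Specification"}
# A hypothetical one-word, uncapitalised term whose definition is bounded to one section.
section_terms = {"trainee": "a maintenance technician using the equipment"}

# Inside the section, the local definition overlays the global word.
in_section = ChainMap(section_terms, document_terms, global_dictionary)
# Elsewhere in the document, the section-scoped meaning does not apply.
elsewhere = ChainMap(document_terms, global_dictionary)

print(in_section["trainee"])
print(elsewhere["trainee"])
print(in_section["FPS"])
```

Because the layers stay separate, leaving the section simply drops its mapping, and a term defined after first use can be added to a layer before the affected text is rescanned.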

Ambiguity

A specification is notionally

unambiguous, but that is only to someone who has the knowledge to understand what it is

saying. Some of the causes of ambiguity:

- A word can be a noun or a verb – costs.

- A word can be a subordinate conjunction or a preposition – as.

- A word can be a participial, an adjectival participle, a verb or part of a verb with a distant verb auxiliary – delivered.

- A coordinate conjunction can group adjectives or objects (Jack and Jill) or relations (… shall repair or replace the instrument) or join clauses. The conjunction may assert simultaneity or sequence.

Anaphora provide

These things taken together, many sentences can be ambiguous, or at least need hypothesising about several meanings to resolve them to the most likely one. Sometimes a singular meaning cannot be resolved, and multiple meanings need to be carried forward. A bounded domain of discourse helps – the “flight” of flight controls of an aircraft is unlikely to have the same meaning as flight risk for a criminal. The bound reduces the number of meanings that need to be modelled, but multiple meanings are still a prime cause of ambiguity.

The Woods for the Trees

In long sentences it is easy to get

bogged down in the detail and not see connections at long range. An outline of the

sentence is constructed in parallel with the parse chain, with only the components that

determine the “shape” of the sentence – verbs, clause introducers such as

subordinate conjunctions, relative pronouns, etc. This outline is used to determine the

context of various objects – participials, noun/verbs, conjuncted infinitives. An outline of the sentence

allows more complex forms to be handled analytically, still with a backstop of

hypothesising.

Hypothesising

In constraint reasoning problems that

occur in design or planning or scheduling, a system will often need to try things out and

observe consequences at long range, analysis by itself being insufficient to supply a

solution – a simple example is placing eight queens on a chessboard [Wikipedia –

eight queens puzzle] so they do not attack each other (this example, as with most

constraint reasoning problems, is static – everything is known about its structure

beforehand, to the point where the problem can be compiled). Reading sentences is no

different to other constraint problems where analysis cannot be used – “it could

have that meaning, which would have the consequence of… - no, that can’t be, it

must have a different meaning”. The system needs to stop building analytically, and

start building hypothetically, undoing connections if it doesn’t work. This is

constructive hypothesising in a dynamic structure – something gets built or destroyed

– rather than choosing objects from a set and propagating them in a static structure.
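For comparison, the static case can be written in a few lines. The sketch below solves the eight queens puzzle by trying a placement, observing the consequences further down the board, and undoing the placement when it fails. It illustrates hypothesise-and-undo only; the structure is fixed in advance, which is exactly what a reading system cannot assume.

```python
from typing import List, Tuple


def solve_queens(n: int = 8) -> List[Tuple[int, ...]]:
    """Return every placement of n queens so that no two attack each other."""
    columns: List[int] = []            # columns[r] is the queen's column in row r
    solutions: List[Tuple[int, ...]] = []

    def safe(row: int, col: int) -> bool:
        # No earlier queen may share the column or a diagonal.
        return all(c != col and abs(c - col) != row - r
                   for r, c in enumerate(columns))

    def place(row: int) -> None:
        if row == n:
            solutions.append(tuple(columns))
            return
        for col in range(n):
            if safe(row, col):
                columns.append(col)    # hypothesise a placement
                place(row + 1)         # observe consequences at long range
                columns.pop()          # undo it and try the next possibility

    place(0)
    return solutions


print(len(solve_queens(8)))
```

The `append` and `pop` pair is the static counterpart of building a connection and then destroying it when the hypothesis fails.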

A reading system needs two different

approaches to hypothesising – one approach is where it tries different possibilities

to forward the building of structure, only one of which will lead to a full build. The

other approach is where several paths will lead to a successful build, and it needs to

choose between them based on “best fit” – which alternative does less

grammatical damage, or more closely follows sentence order (an unreliable guide, but

sometimes the only one) or disrupts the knowledge modelling less (sentences like this

should be encountered rarely in specifications, but also indicate the range of abilities

needed in a reading system). Where hypothesising is not successful, it can be attempted

again after more is read – another fix-up job to be automatically scheduled.

Various Forms of Logic

A specification will usually have very

little propositional logic – it may only be that the sentences are notionally

“ANDed” together, with “if…then” used to describe a temporal

relation. There will be a smattering of existential logic – “It shall be

possible…”, “the labels can be easily read”, “the system must be

capable of….”. Some temporal logic –

“when…”, “…prior to…” or “… an intermediate

stage in…”. Operations on sets – “at least

one of each…”, open sets “including but not

limited to”. Some number handling (surprisingly little, given the educational

emphasis on arithmetic and algebra). And the dominant form – relations on relations

and objects. What is interesting is that there is this jumble of logics, with almost no

attempt to teach the users of specifications about them, perhaps because teaching them

separately would lead to confusion rather than understanding. If we are to model

specifications, then all of these logics need to be captured in a synthesis, not viewed as

separate and unconnected frameworks. The synthesising element we are using to represent a

relation is shown in Figure 3.

It has an object (ToSell) attached to

an operator (RELATION3), the object providing a connection for inheritance and as a

parameter of other relations, and the operator providing connection for logical and

existential states, and the parameters of the relation. The operator manages the relation

between logic and existence – the logical state cannot be truer than the existential

state – if Leander couldn’t swim, then he didn’t swim the Hellespont.

There seems no need to differentiate

between objects and relations – “He processed the film. The process took

hours.” – except that relations have parameters,

which can be other relations. Conceptualising relations as objects allows their properties

to also come through inheritance, simplifying and unifying the knowledge structure.

Figure 3 - Single relation

Figure 4 - Multiple relations
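A minimal sketch of such an element, assuming a three-valued state and hypothetical class and method names, is given below. It shows a relation carrying both a logical and an existential state, the rule that the logical state cannot be truer than the existential state, and a relation used as a parameter of another relation. It does not reproduce the operator shown in the figures.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Union


class State(IntEnum):
    FALSE = 0
    UNKNOWN = 1
    TRUE = 2


@dataclass
class Relation:
    """A relation treated as an object: it has a name and parameters,
    and its parameters may themselves be relations."""
    name: str
    parameters: List[Union[str, "Relation"]] = field(default_factory=list)
    existential: State = State.UNKNOWN   # can it happen?
    logical: State = State.UNKNOWN       # does it happen?

    def set_existential(self, state: State) -> None:
        self.existential = state
        # The logical state cannot be truer than the existential state.
        self.logical = min(self.logical, state)

    def set_logical(self, state: State) -> None:
        if state > self.existential:
            if self.existential is State.FALSE:
                raise ValueError(f"{self.name}: cannot hold, it is not possible")
            self.existential = state     # if it happened, it was possible
        self.logical = state


# If Leander couldn't swim, then he didn't swim.
swim = Relation("ToSwim", ["Leander"])
swim.set_existential(State.FALSE)
print(swim.logical.name)

# "The equipment shall allow trainees to perform diagnostic tests."
perform = Relation("ToPerform", ["trainees", "diagnostic tests"])
allow = Relation("ToAllow", ["equipment", perform])
print(allow.parameters[1].name)
```

Because `perform` is an ordinary object, it can sit in the parameter list of `allow`, which is the step that first-order predicate logic does not permit.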

How Useful Is Grammar?

Most of the work in NLP has been

devoted to an approach that is driven by grammar, such as the work of Pollard. A

multi-step pipeline process is typically used, in that words are turned into parts of

speech, the string of parts of speech is parsed, and the parse structures are used to show

the form of sentences. Many words can be multiple parts of speech. Tagging of words for

parts of speech can present statistics for the likelihood of a word having different parts

of speech, statistics which are usually based on adjacent words. Sentences of complex text

are full of little asides and prepositional chains, so adjacent words may not be semantic neighbours. Some examples:

“It shall in the interests of fairness exclude…”

A specification may be describing

something that has not been described before, so a statistical approach based on similar

text may not be useful in capturing the meaning.

Parsing a complex multi-clause

sentence is much like developing a mathematical proof – once developed, the steps can

seem obvious and inevitable, but the development process involved a great deal of jumping

about and surmise. Of course, the system being described here uses pruning of alternative

parts of speech based on neighbouring words – the difference is that pruning is

undone and redone if the chain of words is rearranged, say by cutting out an adverb, or a

prepositional phrase between a verb auxiliary and a verb, as in the examples above, or by

the insertion of an implied object, such as a relative pronoun. In some cases, such as the

prologue or epilogue for an embedded list, the semantic neighbour of a word may be

hundreds of words away, and requires special machinery to make it appear as a neighbour.
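The effect of undoing and redoing the pruning can be shown with a toy example. The sketch below uses a hypothetical nine-word lexicon, two hypothetical pruning rules, and a sentence adapted from the example above with an invented continuation ("limit access"). Locating the aside is assumed to have been done elsewhere.

```python
from typing import Dict, List, Set

# Hypothetical mini-lexicon: each word with its possible parts of speech.
LEXICON: Dict[str, Set[str]] = {
    "it": {"pronoun"}, "shall": {"aux"}, "in": {"prep"}, "the": {"det"},
    "interests": {"noun", "verb"}, "of": {"prep"}, "fairness": {"noun"},
    "limit": {"noun", "verb"}, "access": {"noun", "verb"},
}


def prune(words: List[str]) -> List[Set[str]]:
    """Start again from the lexicon and prune each word by its left neighbour."""
    tags = [set(LEXICON[w]) for w in words]
    for i in range(1, len(words)):
        left = tags[i - 1]
        if left == {"det"} and len(tags[i]) > 1:
            tags[i].discard("verb")      # a verb does not follow a determiner
        elif left == {"aux"} and "verb" in tags[i]:
            tags[i] = {"verb"}           # the auxiliary selects the verb reading
    return tags


words = "it shall in the interests of fairness limit access".split()
before = prune(words)
print(before[7])        # "limit" is not next to "shall": still ambiguous

# Cut the prepositional aside between the auxiliary and the verb,
# then redo the pruning on the rearranged chain.
reduced = words[:2] + words[7:]
after = prune(reduced)
print(after[2])         # "limit" is now the neighbour of "shall"
```

While the aside is in place the word after it keeps both readings; once the chain is rearranged, the same rule that could not fire before resolves it to a verb.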

Further complicating the problem of

using grammar by itself is that the writer expects the reader to understand the semantics

of what is written, so filler words can be left out. For example:

He left the engine running in the

rain.

He removed the maintenance training from the project.

He upbraided the child playing in the drain.

He wanted the engine running by the weekend.

If we accept that a combination of

grammar and semantics is required to understand the structure of a sentence, before its

transformation into an internal structure, then the problem arises of representing the

necessary knowledge and, crucially, ensuring the representation allows merging of new

knowledge brought in by reading the specification. An ontology [Gruber] is a currently

popular way of representing knowledge, but ontologies as they

are usually implemented are limited to inheritance and attributes (with links of IS_A and

HAS_A), and their structure is static, with no concept of self-extension through the

reading process. A specification describes the relations among objects (“shall allow,

perform, conduct, depict, navigate”). If we allow the

generality of relations instead of the limitation of typed links, there are thousands of

relations used in specifications, many with multiple meanings, and many which are

synonymous through rotation or reflection of their parameters (“buy” and

“sell” are mapped synonyms for each other). As a further example, one would need

to provide mapping for various meanings of a relation such as “omit” (he omitted

the data from the report, the report omitted the data) and include mapping to relations

such as exclude, not include, leave out, not put in, edit out, redact (the mapping of

meaning among relations is one reason the knowledge structure is so dense, daunting unless

the domain is bounded). People are taught a little about synonyms,

and left to intuit the rest. We have to understand all that we were required to intuit

about language, to teach a machine. It is possible to enable a machine to use induction on

examples, but it would make many errors before its modelling came up to a high enough

level of reliability. Reliability is a subject little touched on in the reading of

specifications, but if it were to be specified, across the transformation from text to

internal representation, and then in the thousands of relations in a specification, each

with anywhere from several to several dozen meanings, and with potential error sources also in

propositional, existential, temporal, and locational logic,

what would we say? Our current goal is to achieve 99% overall, and the only way that can

be achieved is to use every possible piece of information, letting nothing slip by or be

thrown away.
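The idea of relations that are synonymous through rotation or reflection of their parameters can be sketched as a mapping table that sends each surface relation to a shared form with a parameter permutation. The canonical name `transfer_for_payment`, the parameter orders and the participants below are hypothetical, chosen only to make the buy and sell case concrete.

```python
from typing import Dict, Sequence, Tuple

# Each surface relation maps to a shared relation plus the permutation that
# puts its parameters into the shared order (seller, buyer, goods).
# All entries are hypothetical illustrations.
MAPPINGS: Dict[str, Tuple[str, Tuple[int, ...]]] = {
    "sell": ("transfer_for_payment", (0, 1, 2)),  # sell(seller, buyer, goods)
    "buy": ("transfer_for_payment", (1, 0, 2)),   # buy(buyer, seller, goods)
}


def canonical(relation: str, params: Sequence[str]) -> Tuple[str, Tuple[str, ...]]:
    """Rewrite a surface relation into the shared relation and parameter order."""
    name, order = MAPPINGS[relation]
    return name, tuple(params[i] for i in order)


sold = canonical("sell", ["Jack", "Jill", "car"])    # Jack sold Jill the car
bought = canonical("buy", ["Jill", "Jack", "car"])   # Jill bought the car from Jack
print(sold == bought)
```

Both sentences reduce to the same structure, so knowledge attached to one relation is available to the other. A real mapping would also need to be keyed by meaning, since a relation such as "omit" has several.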

The upshot is that grammar is used as a helper of the system, not as exclusive arbiter. It is a far more detailed grammar than people are taught, with approximately five thousand patterns. Operation of the grammar is interwoven with semantics. It can be used to eliminate structures which are ungrammatical or to make explicit some objects which are implicit in the text. Some statements in specifications will be ungrammatical, and can’t be changed, so some tolerance for error is necessary – for example, the system fixes up apostrophe errors without complaint.

Statistics of TestBed Specification

A small FPS was used to develop and test the reading system – it had already been developed by reading emails, as a reading system built against only one type of document would be very brittle. A specification has certain characteristics – such as a fondness for lists in sentences – that needed strengthening.

Lines: 180

Headings: 40

Clauses within sentences: 230

Longest prepositional chain: 4 prepositions

Longest noun phrase (excluding article): 8 words

Several terms are expected to be known before the document is read

Most are introduced by appearance in heading before use in text

Some by full name and then acronym in brackets, acronym used from then on

Some by full capitalisation

One (without capitalisation) introduced by a formal definition

Use of bulleted lists within sentences: 4 times

Depth of nesting: 2

Document references: two to external documents, one to “above”, four uses of “following”.

Use of parentheses (other than for acronym definitions): 3

Use of quoted text (implying special meaning): 3 (nouns and verbs)

“and then” appears twice.

“and” in the sense of “and then” between relations which are necessarily ordered in time (such as “create and destroy”) appears fifteen times.

Slash used as a short range conjunction: 5 times

“It”, “they”, not used.

“that” used as a noun determiner 5 times (with the

object name repeated, making anaphora resolution trivial).

“not only…but also” appears once

Once the text is converted into structure, it becomes easy to provide diagnostic tools to search for inconsistencies, ambiguities, general statements which clash with specific statements, actions without reciprocal actions (one can log on, but no means is provided for logging off). The structure also allows searching with free text queries.
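One of these diagnostics, the search for actions without reciprocal actions, might be sketched as follows. The table of reciprocal pairs and the list of extracted actions are hypothetical; in practice both would come from the knowledge structure rather than a hand-written dictionary.

```python
from typing import Dict, Iterable, List

# Hypothetical table of reciprocal actions.
RECIPROCALS: Dict[str, str] = {
    "log on": "log off",
    "create": "destroy",
    "open": "close",
}


def missing_reciprocals(actions: Iterable[str]) -> List[str]:
    """Return the reciprocal actions that the specification never provides."""
    present = set(actions)
    # Make the table symmetric so either member of a pair triggers the check.
    pairs = {**RECIPROCALS, **{v: k for k, v in RECIPROCALS.items()}}
    return sorted(pairs[a] for a in present
                  if a in pairs and pairs[a] not in present)


# Actions assumed to have been extracted from a specification.
found = ["log on", "create", "destroy", "display"]
missing = missing_reciprocals(found)
print(missing)
```

The check reports that logging off is never provided, while the create and destroy pair passes and the unpaired "display" is ignored.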

When one observes the

misinterpretation of a specification given the slightest ambiguity in the text, or the

narrow focus which excludes germane information on a different page, the ignoring of any

statement that does not use “shall”, or the desire to cut a specification into

ever smaller pieces in the hope that this will provide a holistic view, a system which

provides support for reading specifications seems essential.

Some engineers trained in Systems

Engineering have been taught that conjunctions are bad, without understanding their role

in building groups, or specifying simultaneous or sequential constraints. They will be

familiar with the Boolean logic of programming, they may have had a brush with

propositional logic, but otherwise their formal logical reasoning is undeveloped, and we

rely on their language skills for an understanding of the interdependencies described in

the specification, when their language skills in comparison with their spatial skills may

well have persuaded them to take up an engineering career instead of a more language based

career such as law.

The human limitation of six pieces of

information in play, the limited logical training of many of those involved in

requirements management and analysis, and the large improvement in understanding that can

come from an accurate visual representation of semantics, would suggest that a tool to

provide assistance in managing a complex specification is necessary.

Conclusion

We are suggesting that the posing of

requirements requires undirectedness, which natural language does very well. The reading system we

have described may be simple minded, but it is capable of reading requirements logically,

without asserting direction. It displays the meaning it is using, or displays to the

writer of the specification that it lacks the knowledge to discriminate among several

meanings, leading perhaps to clearer English that does not bump against the six pieces

limit, a better thought out order of presentation so that new concepts build on concepts

already introduced, and leaving no room for later argument about ambiguity in the

specification – the structure is more detailed and precise than words allow, while

not requiring crudity of expression as the cost of that precision. It provides a holistic

view of complex specifications, assists in knowledge dissemination, and plugs a

significant weakness in the armour of Systems Engineering.

Large well-written specifications have

the aura of medieval cathedrals about them, magnificent, imposing piles, relying on the

intuitive skill of the builder, with no analysis or quality control to support them.

Modern civil architecture with its understanding of mechanics and its analytic tools is

far more efficient in its use of resources, and its constructions are far safer (medieval

cathedrals would regularly collapse in the building stage, just like modern day large

projects). It may be time to bring a similar depth of analysis to bear on the writing and

reading of specifications.

References

J. Brander and A. Lupu, Is mining of knowledge possible?, Data Mining, Intrusion Detection, Information Assurance, and Data Networks Security, Proc. SPIE, Vol. 6973, 697307 (2008), DOI:10.1117/12.774079

A. Cant, Program Verification Using Higher Order Logic, DSTO Research Report

ERL-0600-RR-1992

J-Y. Cras, A

Review of Industrial Constraint Solving Tools, AI Intelligence,