Active Structures Cause Earthquakes

Abstract

An active structure computing paradigm is described. Its attributes are shamelessly copied from the attributes of the human cognitive apparatus. Its relevance to areas such as knowledge representation, machine learning, autonomous agents, planning, expert systems, theorem proving, common-sense reasoning, probabilistic inference, constraint satisfaction, natural language processing, neural networks and cognitive modeling is touched on.

Introduction

There are many streams of endeavor in Artificial Intelligence research, but they all seem to ignore a successful cognitive model under our noses (actually, just above our noses). Its attributes are:

Active realized structure
Long lived
Visibility of structure
Undirectedness
Extensibility
Complex messages
Self-modifiability

Only two of these attributes need comment: "visibility" and "undirectedness". Our cognitive structure seems highly visible to speech, since a few words can change almost any of our behaviors. If it is that visible to outside influence, we could assume it is highly visible internally as well. As to undirectedness, when we think we don’t always plod from A through B to C. We can proceed in any direction we wish, we may think in a circle, we can "turn things around in our head", we can work out what currently influences what and have the direction of influence reverse a moment later, and we can "build castles in the air" that didn’t exist when we started to think about a situation.

Figure 1 – Structure building

If we look at the various streams in AI (knowledge representation, machine learning, autonomous agents, planning, machine perception, expert systems, theorem proving, common-sense reasoning, probabilistic inference, constraint satisfaction, natural language processing, neural networks, reinforcement learning and cognitive modeling), we see none, with the possible exception of cognitive modeling, that even comes close to the attributes listed. To touch briefly on a few paradigms: the Turing machine was carefully designed to show that machines were unsuited to high-level cognitive activity, so there seems little point in following that path. Ontologies have a hierarchical, single-parent structure intended for a completely different purpose from the one to which they are now put, they need to separate objects and tasks, and they rely on an algorithm to patch over all the deficiencies of the data structure. Algorithms have a static (unrealized and invisible) structure, which makes them fine for working out prime numbers but unsuitable for interaction with a changing world. Artificial neural networks lack every one of the listed attributes, so what exactly were we expecting to get from them? Many of the paradigms in AI seem to owe their names more to marketing genius than to any rational or scientific basis.

So how should we implement the listed attributes? We can build an active structure in computer memory out of operators, variables and links. The structure remains in memory, or can be saved to disk and reloaded with all its states intact, and its own states can be used to modify it with minimum trauma, so it is long-lived. The structure is notionally undirected: messages can flow in either direction in the links, which function as wires. The variables expose the messages in the structure and permit undirected connection, so we have high visibility. We can propagate complex messages in the links (logical states, numbers, discontiguous ranges, strings, lists, objects, structure), and these messages use states in the structure to phase themselves. Because the structure is undirected and its activity is distributed throughout, it is extensible: we can join two structures together and have messages flow between them, so the behavior of the assembly depends on the activity throughout the structure. If we allow the messages to signal operators to modify the structure, we have self-modification.
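As a minimal sketch of links acting as undirected wires, and of two separately built structures being joined so that messages flow between them, the Python below uses hypothetical `Variable` and `Link` classes. It assumes that a link simply copies a message to whichever end lacks it and that a mismatching message is a conflict. It illustrates the idea and is not the paradigm's actual implementation.

```python
# Hypothetical sketch: variables joined by undirected "soft wire" links.


class Variable:
    """Exposes the message currently present at one point in the structure."""

    def __init__(self, name):
        self.name = name
        self.message = None  # None means no message has arrived yet
        self.links = []

    def receive(self, message, came_from=None):
        if self.message == message:
            return  # consistent with what is already here: nothing new to send
        if self.message is not None:
            raise ValueError(f"conflicting messages at {self.name}")
        self.message = message
        # Pass the message on along every other wire, whatever its "direction".
        for link in self.links:
            if link is not came_from:
                link.carry(message, origin=self)


class Link:
    """A soft wire: carries a message from whichever end has it to the other end."""

    def __init__(self, a, b):
        self.ends = (a, b)
        a.links.append(self)
        b.links.append(self)

    def carry(self, message, origin):
        other = self.ends[1] if origin is self.ends[0] else self.ends[0]
        other.receive(message, came_from=self)


# Two separately built structures ...
p, q = Variable("p"), Variable("q")
r, s = Variable("r"), Variable("s")
Link(p, q)
Link(r, s)
# ... are joined by one new wire, and a message entering at either end reaches the other.
Link(q, r)
s.receive("red")
print(p.message)  # red
```

Nothing in a link fixes a direction. The same wire carried "red" from `s` toward `p` here and would equally carry a message entering at `p` toward `s`.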

Why haven’t we done this already? It seems that we looked at the base element of the human cognitive apparatus, saw a directed element, and assumed we could build a structure with the same abilities from directed elements. In doing so we ignored the distributed activity, the complex messaging, the back connections and the self-excitation that allow the higher cognitive levels in humans to appear undirected. We saw the single firing of the neuron and concluded the message was simple, ignoring the fact that a complex ensemble message can be constructed from simple components, their sequence and their timing.

Another possible reason is that there was an attempt at an active structure in the early 80s, Steele’s CONSTRAINT, which ended up suggesting a very unpalatable random excitation to overcome its problems. There were severe errors in the implementation. In particular, it didn’t use logical states in the structure to phase the propagation, so too much was left to the procedures that handled the propagation. The links were also virtual, so the messages had to be duplicated in the nodes at each end of each link, which led to a highly convoluted, incoherent mechanism.

Other reasons are that we attempt to segment the problem into its parts, and that the foundations were never intended for what we are trying to build. Sometimes segmentation works very well. We look at the beauty of a bird’s wing, think we can’t do that, and build machines with separate mechanisms for lift and propulsion, then later find we can after all and build a helicopter. Sometimes segmentation destroys the essence of what we are trying to do. The solution we devise then has no relevance to the larger problem, or we redefine the problem to suit the solution we have devised, distancing ourselves from any real use. The conceptual foundations for computing were devised with the express desire to show that machines could not engage in high-level cognitive activity. We might ask whether we accepted the premises of that argument too readily, and whether we still allow them to color what we do.

Brooks describes an active structure used in robots. The structure is quite primitive, perhaps deliberately so: a fixed-topology network, uni-directional connections, small single-value numbers. To quote: "Any search space must be quite bounded in size, as search nodes cannot be dynamically created and destroyed during the search process". So we have poor visibility (this is a map, after all), no undirectedness, extensibility only from outside the paradigm, no self-modifiability, and propagation without logical control of states. Such a scheme would seem to have a limited future in machine perception. Humans can get from the object Fred Nerk to his appearance ("Fred has a big nose"), and they can also get from the appearance to Fred. When we recognize Fred, we effectively replace the visual blob with the object in our map of our surroundings, and we can then reason about its presence. That diversity of behavior implies either dense back connections and cross-linking on the perception channel, or that undirectedness is necessary everywhere. A further restriction is that the whole map must be built of static structure, rather than of structure that grows and shrinks as the robot moves (pulsating like a stack, even). That restriction guarantees no way forward. By the same token, creating and maintaining static structure for all the text encountered in information extraction, and for all the further structure-building involved, would be impossible for anything but the smallest toy. Still, the term "active structure" has been introduced, and "there is no distinction between data and process" may cause some apoplexy among builders of segregated ontologies.

The attributes in more detail

Active Structure

Operators in the structure respond to a change of state on one or more of their connections. Either they ignore the change, or they change the states on one or more of their connections, possibly including the connection that carried the incoming change. By this means no algorithm is used, and the phasing depends purely on the activity in the connections (an external system handles the messaging, but it knows nothing about what the messages mean). There are analogues in the structure for all the operations of a sequential machine: an IF...THEN, a FOR loop, a GET/PUT cycle, a sequence. Each analogue uses logical states in a structure to control its operations, which may include changing the structure. There is a basic theoretical problem with using an algorithm, a fixed stream of instructions, to interact with a changing world. An active structure uses the states in its connections to resolve what to do while the environment changes those connections and states, so it does not share this problem. Some operations occurring in the structure would make any algorithm outside it that was trying to maintain its states very unhappy. Such an algorithm would continually find the rug pulled from under it as the structure changed, and it could not communicate these changes because it would be stuck up its stack. As what we try to do becomes more complex, we will finally have to bury the algorithm as a valued helper who brought us a little way but misled us as to direction (while digging it up regularly to do prime numbers).
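The IF...THEN analogue can be sketched as follows, assuming that a simple agenda (a queue of pending state changes) stands in for the external messaging system. The names `Var`, `IfThen` and `run` are hypothetical. The operator ignores changes it has no use for, and otherwise sets a state on one of its connections. True arriving on the condition therefore flows forward and False arriving on the consequent flows backward, while the `run` loop never inspects what the states mean.

```python
# Hypothetical sketch: an undirected IF...THEN operator driven by an agenda.
from collections import deque


class Var:
    def __init__(self, name):
        self.name = name
        self.state = None  # None = unknown; otherwise True or False
        self.ops = []      # operators connected to this variable

    def set(self, state, agenda):
        if self.state is None:
            self.state = state
            # Announce the change to every connected operator; meaning is left to them.
            agenda.extend((op, self) for op in self.ops)
        elif self.state != state:
            raise ValueError(f"conflicting states on {self.name}")


class IfThen:
    """IF cond THEN then: True flows forward, False flows backward."""

    def __init__(self, cond, then):
        self.cond, self.then = cond, then
        cond.ops.append(self)
        then.ops.append(self)

    def on_change(self, source, agenda):
        if source is self.cond and self.cond.state is True:
            self.then.set(True, agenda)    # modus ponens
        elif source is self.then and self.then.state is False:
            self.cond.set(False, agenda)   # modus tollens
        # Any other change is ignored.


def run(agenda):
    """The messaging system: delivers state changes without interpreting them."""
    while agenda:
        op, source = agenda.popleft()
        op.on_change(source, agenda)


rain, wet, slippery = Var("rain"), Var("wet"), Var("slippery")
IfThen(rain, wet)
IfThen(wet, slippery)
agenda = deque()
slippery.set(False, agenda)  # information enters at the "output" end
run(agenda)
print(rain.state)  # False
```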

Visibility of structure

Variables in the active structure provide visibility and points of connection. If visibility implied rigidity, in the sense that the structure had no ability to change itself, visibility would not be a good thing in cognitive applications. A pulsating stack seems to provide fluidity of resource, but with it comes invisibility, because the rest of the machine cannot see or influence what is happening on the stack. In logic programming a seemingly undirected structure is built on the stack, but again it is invisible. An active structure can be visible and still have the resources to build on itself and change itself. The FOR loop analogue in active structure is a thousand times slower than its sequential equivalent, but it is visible to, and can be connected to and interact with, all parts of the machine.

Undirectedness

The human uses back connections and self-excitation to overcome the limitations of directed components. The active structure uses "soft" wires that propagate signals in both directions, removing the need for many back connections. The signal that phases propagation is the logical state, extended with Error and Unknowable states.

A message that is consistent with an existing message can be overlaid on it, possibly changing the direction of propagation. An inconsistent message requires the existing message to be killed first, so that invalid inferences are not made. A False state propagating in one direction cannot override a True already present from the other direction. However, either state can override an Unknowable state coming from the other direction, since both are consistent with it.
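The overlay rule can be written as a small function. This is a sketch under stated assumptions: the state names, `overlay` and the `Inconsistent` exception are hypothetical. The text only specifies the True, False and Unknowable cases, so two rules here are guesses: an Error persists once present, and when Unknowable arrives on top of a definite state, the definite state stays.

```python
# Hypothetical sketch of the overlay rule for logical-state messages.
from enum import Enum


class State(Enum):
    NONE = "none"              # no message present yet
    TRUE = "true"
    FALSE = "false"
    UNKNOWABLE = "unknowable"
    ERROR = "error"


class Inconsistent(Exception):
    """The existing message must be killed before the incoming one can be placed."""


def overlay(existing, incoming):
    """Return the state left on a connection after `incoming` meets `existing`."""
    if incoming is State.NONE:
        return existing
    if existing is State.NONE or existing is incoming:
        return incoming
    if State.ERROR in (existing, incoming):
        return State.ERROR     # assumption: an error, once present, persists
    if existing is State.UNKNOWABLE:
        return incoming        # True or False may override Unknowable
    if incoming is State.UNKNOWABLE:
        return existing        # assumption: the more definite state stays
    raise Inconsistent(f"{incoming.name} cannot override {existing.name}")


print(overlay(State.UNKNOWABLE, State.TRUE))  # State.TRUE
```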

Extensibility

If we have visibility and undirectedness, extensibility pretty much follows. Extensibility can mean merging two structures, putting a new superstructure or substructure on an existing structure, or an operator extending itself. When two structures are merged, there will commonly be no exact match between their corresponding points, so additional structure must be patched in to transform information as it flows between them. Some operators store information, either by adding stub links or by building a special-purpose structure. There is no constraint on this, except for the availability of uncommitted structure elements.
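When two merged structures disagree in representation, the patched-in structure can be as small as a two-way conversion link. The sketch below assumes, purely for illustration, that one structure holds a temperature in Celsius and the other holds it in Fahrenheit. `Var`, `TransformLink` and `join_temperatures` are hypothetical names. Because the link holds both the forward and the inverse transformation, information still flows either way across the join.

```python
# Hypothetical sketch: a patch link that transforms values flowing between two structures.


class Var:
    def __init__(self, name):
        self.name = name
        self.value = None
        self.links = []

    def receive(self, value, came_from=None):
        if self.value is not None:
            if abs(self.value - value) > 1e-9:
                raise ValueError(f"inconsistent value at {self.name}")
            return
        self.value = value
        for link in self.links:
            if link is not came_from:
                link.carry(origin=self)


class TransformLink:
    """Patch structure joining two structures whose representations differ."""

    def __init__(self, a, b, forward, backward):
        self.a, self.b = a, b
        self.forward, self.backward = forward, backward  # a -> b and b -> a
        a.links.append(self)
        b.links.append(self)

    def carry(self, origin):
        if origin is self.a:
            self.b.receive(self.forward(self.a.value), came_from=self)
        else:
            self.a.receive(self.backward(self.b.value), came_from=self)


def join_temperatures(celsius, fahrenheit):
    return TransformLink(celsius, fahrenheit,
                         forward=lambda c: c * 9 / 5 + 32,
                         backward=lambda f: (f - 32) * 5 / 9)


c, f = Var("celsius"), Var("fahrenheit")
join_temperatures(c, f)
f.receive(212)
print(c.value)  # 100.0
```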

Complex Messages

The messages in the structure range from simple logical states, through strings, numbers and alternatives (including discontiguous ranges), to lists, objects and structure. The more complex messages are built from the same stuff as the active structure, which makes them simple to backtrack. Still more complex messages between operators are handled either by assembling them from components or by using multiple channels.
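A discontiguous range message can be represented as a sorted list of closed intervals, and overlaying one consistent range message on another then amounts to intersecting them. The sketch below assumes that representation. `normalize` and `intersect` are hypothetical helpers, and an empty result stands for an inconsistency that would require a message to be killed.

```python
# Hypothetical sketch: discontiguous range messages as sorted closed intervals.
from typing import List, Tuple

Range = List[Tuple[float, float]]  # closed intervals [lo, hi]


def normalize(intervals: Range) -> Range:
    """Sort intervals and merge any that overlap or touch."""
    merged: Range = []
    for lo, hi in sorted(intervals):
        if lo > hi:
            raise ValueError(f"empty interval ({lo}, {hi})")
        if merged and lo <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], hi))
        else:
            merged.append((lo, hi))
    return merged


def intersect(a: Range, b: Range) -> Range:
    """Narrow one range message by another; [] means the two are inconsistent."""
    a, b = normalize(a), normalize(b)
    i = j = 0
    out: Range = []
    while i < len(a) and j < len(b):
        lo = max(a[i][0], b[j][0])
        hi = min(a[i][1], b[j][1])
        if lo <= hi:
            out.append((lo, hi))
        # Advance whichever interval finishes first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return out


print(intersect([(0, 5), (10, 20)], [(3, 12)]))  # [(3, 5), (10, 12)]
```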

Self-modifiable Structure

There is a "soup" of uncommitted structure elements which can be taken up or discarded to allow the structure to modify itself. In many places where this is used (self-growth to handle local complexity, for example), it is difficult to think how similar fluidity of operation could be obtained otherwise. We talk about self-modification of the structure, but we are not promising to turn a single operator in the structure into a human being. A structure operator connects numeric variables to a plus operator when it is presented with them in a variable list; a distribution operator uses structure elements for its internal map; an event operator may create plausible consequent events, which in turn...; a parse operator turns a string of words into a noun clause, creates another parse operator to operate on the new symbolization, then destroys itself and the surrounding structure it has subsumed. The self-modification we describe is limited in extent and needs signals from the surrounding structure to support it, but it is valuable nevertheless. It is also backtrackable.

Some other attributes have no direct equivalent in the human cognitive model but are necessary to provide equivalent properties:

Structural Backtrack

To handle tentative decision-making in planning and other areas, backtracking is used. In other paradigms intended for planning or resource allocation, a new copy of the variables is placed on the stack. This has the obvious difficulty that all the variables must be known beforehand. When the automated planner needs to build a "castle in the air", turn it around, examine where it leads and follow that path, foreknowledge of all the variables is not possible (and the stack would be invisible to new structure anyway). The active structure implementation draws on a resource of uncommitted structure elements. Any new structure or complex message is built from this resource, and any structure that is destroyed or message that is killed is returned to it. We needn’t just build the castle; we can renovate it as well. Backtracking involves storing an image of an element before it is changed, where the image contains all its states, and overlaying the stored image to revert to the previous state. Everything that happens is stored in structure elements, including information about the pool of uncommitted elements, so backtracking on newly built structure is easily achieved. This is also a useful general error-handling technique. During data mining, structure and states are altered based on activation by each table record, and considerable processing may occur as the incoming information is driven through the structure. If an error overwhelms the error handling provided by specific data-cleaning structure, all the effects of the activation by that record are undone. If no error is encountered, the tentative states are merged with the previously accepted states.
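The image-and-overlay mechanism can be sketched with a trail. This minimal illustration assumes that elements are plain dictionaries of states held in a hypothetical `StructureStore`. While a tentative level is open, each change pushes onto the trail either the element's prior image or a record of an allocation or release from the pool of uncommitted elements. `undo` overlays the stored images in reverse order, and `commit` keeps the tentative states. The per-record usage, including the `absorb` function and its "data cleaning" check, is also hypothetical.

```python
# Hypothetical sketch of structural backtrack using a trail of prior images.
import copy


class StructureStore:
    """Structure elements plus a trail of prior images for structural backtrack."""

    def __init__(self, pool_size):
        self.free = list(range(pool_size))  # pool of uncommitted element ids
        self.elements = {}                  # committed element id -> its states
        self.trail = []                     # undo entries, newest last
        self.marks = []                     # trail positions of open tentative levels

    def _record(self, kind, eid, image):
        if self.marks:                      # only tentative work needs an undo entry
            self.trail.append((kind, eid, image))

    def begin(self):
        self.marks.append(len(self.trail))

    def allocate(self, states):
        eid = self.free.pop()               # take an element from the soup
        self._record("alloc", eid, None)
        self.elements[eid] = dict(states)
        return eid

    def update(self, eid, **changes):
        # Store an image of the element before it is changed.
        self._record("image", eid, copy.deepcopy(self.elements[eid]))
        self.elements[eid].update(changes)

    def release(self, eid):
        self._record("release", eid, self.elements.pop(eid))
        self.free.append(eid)               # discard back to the soup

    def undo(self):
        """Revert everything since the matching begin()."""
        mark = self.marks.pop()
        while len(self.trail) > mark:
            kind, eid, image = self.trail.pop()
            if kind == "alloc":
                del self.elements[eid]
                self.free.append(eid)
            else:                           # "image" or "release": overlay the stored image
                if kind == "release":
                    self.free.remove(eid)
                self.elements[eid] = image

    def commit(self):
        """Accept the tentative states; keep the trail only if an outer level is open."""
        self.marks.pop()
        if not self.marks:
            self.trail.clear()


store = StructureStore(pool_size=8)
total = store.allocate({"value": 0})


def absorb(record):
    """Drive one table record through the structure; undo all of it on error."""
    store.begin()
    try:
        scratch = store.allocate({"value": record})
        if record < 0:
            raise ValueError("record failed data cleaning")
        store.update(total, value=store.elements[total]["value"] + record)
        store.release(scratch)
        store.commit()
    except ValueError:
        store.undo()


for record in [5, -1, 7]:
    absorb(record)
print(store.elements[total])  # {'value': 12}
```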

Spreading Activation

Several operator pairs (resource/usage, distribution/correlation) do not rely solely on communication through their connections. A resource operator with a connection for every time period would need thousands of connections, making it grossly inefficient in operation. Instead, a usage operator with a single connection to the resource looks through the bookings in the resource operator for other usage operators that would conflict with it. It then notifies them directly of a change in their environment by activating them. If the connectivity paradigm breaks down so readily, can it be any good? You can find out by watching a human struggle with a complex resourcing problem. Too many things interacting closely and rapidly overload them, and they fall back on some tunable heuristics to handle it. Not bad, but a machine can do it better.
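The following is a minimal sketch of this direct activation, assuming half-open booking intervals `[start, end)` and hypothetical `Resource` and `Usage` classes. The usage has a single link to the resource. Rather than relying on thousands of per-period connections, it finds the operators to wake by searching the resource's bookings.

```python
# Hypothetical sketch: a usage activates conflicting usages found in the resource's bookings.


class Resource:
    def __init__(self, name):
        self.name = name
        self.bookings = []  # (usage, start, end) with end exclusive


class Usage:
    """A usage operator with a single connection to its resource."""

    def __init__(self, name, resource):
        self.name = name
        self.resource = resource
        self.woken_by = []  # record of activations received

    def book(self, start, end):
        # Instead of one connection per time period, scan the resource's bookings
        # and activate any usage this booking would conflict with.
        for other, s, e in self.resource.bookings:
            if other is not self and s < end and start < e:
                other.activate(cause=self)
        self.resource.bookings.append((self, start, end))

    def activate(self, cause):
        # The whole message: "wake yourself up and look around you".
        # A real operator would now re-examine its environment; here we only record it.
        self.woken_by.append(cause.name)


crane = Resource("crane")
lift_a = Usage("lift A", crane)
lift_b = Usage("lift B", crane)
lift_c = Usage("lift C", crane)
lift_a.book(0, 10)
lift_b.book(5, 15)   # overlaps lift A, so lift A is activated
lift_c.book(15, 20)  # touches lift B only at the boundary: no conflict
print(lift_a.woken_by, lift_b.woken_by)  # ['lift B'] []
```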

Instant Topological Change

When the structure is modified and a new link is added, information may be available to flow through the link, and it starts to do so as soon as the connection is made. In a simple case of self-modification, handling

X = SUM(List)

when the identities of the members of the list become known, they are connected to a PLUS operator already linked to X through an EQUALS operator. If any of the variables, including X, are known, information will flow into the PLUS operator and out again on whichever links are appropriate. This is self-modification, undirectedness and possibly structural backtrack in action.
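The X = SUM(List) case can be sketched as an undirected sum operator under simplifying assumptions. The EQUALS is folded into a hypothetical `SumOp`, values are plain numbers rather than ranges, and the operator infers only when exactly one of X and the list members is unknown. The member links are added in one step when the list's identities become known, and information flows through them immediately, in whichever direction is needed.

```python
# Hypothetical sketch: an undirected sum operator wired in when the list becomes known.
from collections import deque


class Var:
    def __init__(self, name, value=None):
        self.name, self.value, self.ops = name, value, []

    def set(self, value, agenda):
        if self.value is None:
            self.value = value
            agenda.extend(self.ops)  # wake every operator attached to this variable
        elif self.value != value:
            raise ValueError(f"inconsistent value for {self.name}")


class SumOp:
    """Undirected total = t1 + ... + tn: fills in whichever single value is unknown."""

    def __init__(self, total):
        self.total, self.terms = total, []
        total.ops.append(self)

    def connect(self, terms, agenda):
        # Self-modification: the list members become known and are wired in,
        # and the new links start carrying information at once.
        for term in terms:
            self.terms.append(term)
            term.ops.append(self)
        agenda.append(self)

    def fire(self, agenda):
        unknown = [v for v in [self.total, *self.terms] if v.value is None]
        if len(unknown) != 1:
            return  # nothing to infer yet, or already fully determined
        known = sum(v.value for v in self.terms if v.value is not None)
        missing = unknown[0]
        if missing is self.total:
            missing.set(known, agenda)
        else:
            missing.set(self.total.value - known, agenda)


def run(agenda):
    while agenda:
        agenda.popleft().fire(agenda)


x = Var("X", 10)
plus = SumOp(x)
a, b, c = Var("a", 3), Var("b"), Var("c", 4)
agenda = deque()
plus.connect([a, b, c], agenda)  # the list's identities arrive
run(agenda)
print(b.value)  # 3
```

Here X was known and one member was not, so information flowed out of the operator toward `b`. With all members known and X unknown, the same operator would instead fill in X.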

Examples

Here are some examples of active structure in action.

Distributions and Correlations

Distributions and correlations are represented in the structure by operators which store information. The operators exploit the complex messaging – the propagation of discontiguous ranges or sets of alternatives – and the undirectedness of the structure. In a Learn state, information flows into the operator from its connections to the structure, and in a Run state, information flows out through the same connections to the structure, illuminating the structure when it has no other input.

Figure 2 – A stochastic analogue of A + B = C

Correlation operators (RELATION in Figure 2) provide connections from one distribution to another, so that a reduction of range in one dimension is reflected in a change of distribution in the other dimensions. The operators allow the insertion of ad hoc experiential knowledge, a combination of data mining and operational use, or "learning on the run" from each activation. The combination of distribution and correlation operators provides a stochastic analogue of undirected analytic statements like A + B = C. The logical and state control connections of the operators are the analogue of the EQUALS operator.
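The Learn and Run behavior of a correlation operator can be approximated very roughly by storing joint observations and conditioning on range restrictions. The sketch below is an assumption-laden stand-in, not the RELATION operator itself. The hypothetical `Relation` class stores raw samples rather than a distribution map. In the Run direction, it returns a frequency count for every unconstrained dimension, taken over the samples that satisfy the ranges. Constraining C narrows A and B, just as constraining A narrows B and C, and this is the undirectedness the A + B = C analogue relies on.

```python
# Hypothetical sketch: a correlation operator that learns joint samples and conditions on ranges.
from collections import Counter


class Relation:
    """Hypothetical correlation operator over named dimensions."""

    def __init__(self, *dims):
        self.dims = dims
        self.samples = []

    def learn(self, **values):
        # Learn state: information flows in from the connected variables.
        self.samples.append(tuple(values[d] for d in self.dims))

    def run(self, **ranges):
        # Run state: restrict some dimensions to (lo, hi) ranges and let the
        # resulting distributions flow out on the remaining dimensions.
        index = {d: i for i, d in enumerate(self.dims)}
        hits = [s for s in self.samples
                if all(lo <= s[index[d]] <= hi for d, (lo, hi) in ranges.items())]
        return {d: Counter(s[index[d]] for s in hits)
                for d in self.dims if d not in ranges}


rel = Relation("a", "b", "c")
for a in range(4):
    for b in range(4):
        rel.learn(a=a, b=b, c=a + b)  # experience of A + B = C

print(rel.run(c=(6, 6)))  # {'a': Counter({3: 1}), 'b': Counter({3: 1})}
```

With noisy rather than exact observations, the same query would return a spread of values instead of a single one. This is where the "stochastic" part of the analogue comes in.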

Resource Usage

To handle resource allocation problems, active structures are built comprising operators representing activities, usages and resources, and variables representing start, duration, finish, intensity of use and existence. An activity may (1) exist, (2) be unable to exist, or (3) not exist even though its structure is present. Likewise, "the man has no legs" means we put the legs structure dark rather than temporarily destroying it. The usage operators "soft" book resources based on the ranges present on their connections. If a "hard" booking is made, other usages of that resource with which it conflicts are "bumped" from that time period, and other usages on the same activity are also hard booked in that time period. The bumping of a usage on an activity then leads to the bumping of the other usages of different resources on that activity, and so on in a cascade. The structure is using three of its abilities here. It uses its undirectedness, because the sequence of who goes first depends on other factors. It uses its ability to handle complex messages. It also uses spreading activation to handle the case where the message is too complex to propagate, so the only possible message is "Wake yourself up and look around you". All of this booking and bumping is handled by structural backtracking on the booking slips while decisions are tentative.
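The hard-booking cascade can be sketched as below. This is a simplified illustration under several assumptions. Time is a set of discrete periods, each resource holds at most one activity per period, and a hard booking takes every resource its activity uses. A bumped activity (hypothetically) tries again in the next period, which can bump others in turn. Soft booking on ranges and the structural backtracking of booking slips are left out, and `Schedule` and its methods are hypothetical names.

```python
# Hypothetical sketch: hard booking with cascading bumps across activities.


class Schedule:
    def __init__(self, uses, horizon):
        self.uses = uses          # activity -> resources it needs
        self.horizon = horizon    # number of discrete periods available
        self.slots = {}           # (resource, period) -> activity hard booked there
        self.bumped = []          # (activity, period) in the order bumps occurred
        self.unplaced = []        # activities pushed past the horizon

    def bump(self, activity, period):
        # Bumping one usage bumps every other usage of the same activity.
        for res in self.uses[activity]:
            if self.slots.get((res, period)) == activity:
                del self.slots[(res, period)]
        self.bumped.append((activity, period))

    def hard_book(self, activity, period):
        if period >= self.horizon:
            self.unplaced.append(activity)
            return
        holders = {self.slots.get((res, period)) for res in self.uses[activity]}
        holders -= {None, activity}
        for holder in sorted(holders):
            self.bump(holder, period)
        # All usages on this activity are hard booked in this period.
        for res in self.uses[activity]:
            self.slots[(res, period)] = activity
        # Cascade: each bumped activity tries the next period.
        for holder in sorted(holders):
            self.hard_book(holder, period + 1)


plan = Schedule({"pour": {"crane", "crew"}, "lift": {"crane"}, "fit": {"crew"}},
                horizon=3)
plan.hard_book("lift", 0)
plan.hard_book("fit", 0)
plan.hard_book("pour", 0)  # conflicts with both, which move to period 1
print(plan.bumped)  # [('fit', 0), ('lift', 0)]
```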

Natural Language Processing

This is an area where segmentation reigns, and it is also the area of cognitive activity where segmentation is least effective. Natural language smashes all the directed and static approaches with a few words, because the words have meaning, and that meaning changes the meaning of the words that follow. Text is all about transferring structure from the active structure in one person’s head to another active structure. Some have been building huge static structures to represent grammars [HPSG] or word ontologies [WordNet], only to find that the static structure alone can’t handle the dynamic complexity. Others attempt to segment the processes involved, with simple interfaces passing single values, and then try to limit the area of operation so that performance is acceptable. Natural language requires knowledge to understand what is being said, and that knowledge needs to be in a form that can be modified by what is said. Meanings can also be highly ambiguous, so the knowledge has to tolerate ambiguity in its structure, and revision when later information invalidates what seemed like the only choice. NLP underscores the attributes of active structure: long-lived, visible, undirected, extensible, complex messaging, self-modifying, structural backtrack.

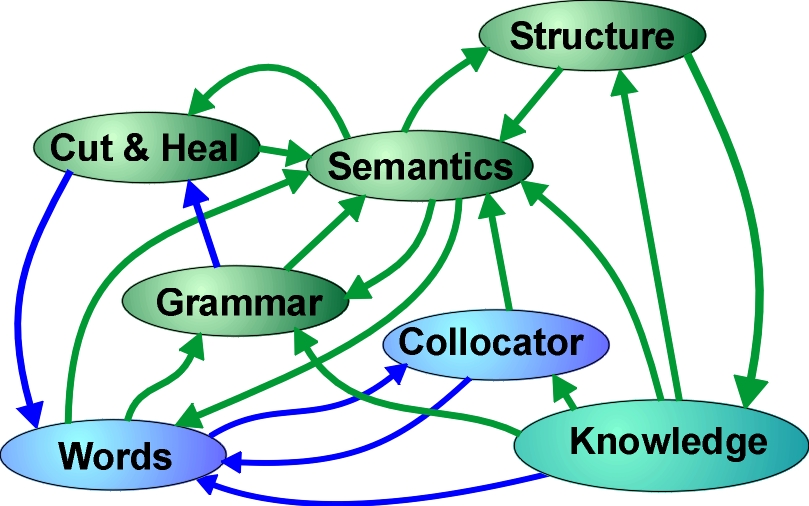

Figure 3 – The active structure processes in NLP

Simplifying what happens for NLP in computer-borne active structure: we take the words and turn them into POS tags, and we already have ambiguity (represented by alternatives in the messages). We build grammatical structures, with more ambiguity, this time in the structure as well. We build semantic structures, with still more, and event chains, with even more. Some of the POS tag ambiguity can be resolved using grammatical rules, some of the grammatical ambiguity can be resolved using semantics, and some of the semantic ambiguity can be resolved by reasoning about chains of events. There are multiple overlapping notional processes within the one active structure, each using complex messaging in undirected structure. The processes are simultaneous, opportunistic and synergistic: a shred of information becoming available at any level is sent backwards or forwards to resolve ambiguity. At the grammar level, we use self-modifiability for two purposes. One is to convert a structure based on words into a structure based on grammatical objects like noun clauses. The other is to handle the unpredictable propagation delays in a dynamic network structure sitting on a sequential machine. At the knowledge level, we use visibility to link to the existing structure, and extensibility to add new knowledge to it. We use structural backtrack to handle the creation and evaluation of tentative structure, so that we can build further layers when we are not sure about the current layer. Finally, we may need to build ambiguity into the knowledge structure (Is there life on Mars? If there is, then...) and wait who knows how long for the invalid structure to be destroyed. Which of the attributes of active structure we have listed could be withheld, with the overall NLP process still expected to work as we have described? Does any part sound alien? The emphasis on parts of speech sounds a little off, but we humans learnt to handle those informally.
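The first of these layers can be sketched in a few lines: POS ambiguity is carried as alternatives and narrowed by grammatical rules until nothing more changes. The lexicon and both rules are hypothetical toys chosen for illustration. A real active structure would run this alongside the grammatical, semantic and event layers rather than as a separate pass.

```python
# Hypothetical sketch: POS alternatives narrowed by toy grammatical rules to a fixpoint.

LEXICON = {  # hypothetical toy lexicon: word -> alternative POS tags
    "the": {"DET"},
    "saw": {"NOUN", "VERB"},
    "cuts": {"NOUN", "VERB"},
}


def no_verb_after_determiner(tags):
    """A word directly after an unambiguous determiner is not a verb."""
    changed = False
    for prev, cur in zip(tags, tags[1:]):
        if prev == {"DET"} and "VERB" in cur and len(cur) > 1:
            cur.discard("VERB")
            changed = True
    return changed


def sentence_needs_a_verb(tags):
    """If only one word could still be the verb, it must be the verb."""
    if any(t == {"VERB"} for t in tags):
        return False
    candidates = [t for t in tags if "VERB" in t]
    if len(candidates) == 1 and len(candidates[0]) > 1:
        candidates[0].intersection_update({"VERB"})
        return True
    return False


def tag(words, rules=(no_verb_after_determiner, sentence_needs_a_verb)):
    tags = [set(LEXICON[w]) for w in words]  # each message is a set of alternatives
    # Keep applying rules until none of them narrows any alternative set.
    while any(rule(tags) for rule in rules):
        pass
    return tags


print(tag(["the", "saw", "cuts"]))  # [{'DET'}, {'NOUN'}, {'VERB'}]
```

Without the determiner, as in `tag(["saw", "cuts"])`, neither rule fires and both words keep their alternatives. That residual ambiguity would be passed upward for the grammatical and semantic layers to resolve.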

Conclusion

There is a reasonably successful cognitive model with which we are all familiar – why not emulate its attributes to support and amplify the capabilities of its users while shoring up its weaknesses?