Abstract

An automated approach to handling knowledge for a call centre is described, together with some of the problems of such an approach. The system strongly integrates existential, propositional, relational and temporal logic with grammar as a way of reaching high reliability in its operation.

Where a call centre is supporting millions of users and the same textual knowledge applies to all the users (tax legislation, say), it is feasible to produce a great deal of supporting material which covers every nuance of the text. A large US health insurance call centre, by contrast, operates with thousands of different source documents (insurance policies and certificates), each ranging in size from forty to one hundred and fifty pages. There are two basic classes of insurance: "el cheapo" plans for a person paying directly, and "Cadillac" plans for employees (it is tax effective to route benefits through these plans rather than pay higher wages). By introducing a variety of special conditions, the payout on the policy can be minimised, with the result that the documents have an uneasy mix of legal and medical terms.

When a call comes in, the call centre operator must become au fait with all the benefits, exclusions, pre-existing conditions and current state of the "covered person", so a robust answer can be given to any query the covered person or the health care provider acting on their behalf may raise. If the call centre operator chooses to use an answer they used with a different caller, it may be wrong for the new caller, but the operator does not have time to laboriously read turgid legalese.

It may be thought that the problem could be solved by writing the certificates in plain English, but plain English also uses words like "also" and "unless" because of their semantic power – necessary (another example) when something complex is being described. When compared with requirements text, where a new concept may be described in words for the first time and which the reading system may struggle to recognise, the certificate is conceptually simpler, in that it will not introduce any concept that has not existed before (but it may use somewhat opaque language to exclude something that was included before).

We are using a different approach from the conventional "semantic net". The concept of a semantic descriptor would seem to be a dead end for knowledge that requires layering, such as "You are responsible for making the payment". Lieberman et al [1] give the example "playing tennis requires a tennis racket" and show it conceptualised as [EventForGoalEvent "play tennis" "have racket"]. Our approach is to conceive of relations as objects, allowing the structure shown in Figure 1. The object that is "person plays tennis" becomes the second object of "Person uses racket", with "Person" being common to both. There are more connections, but those connections would seem to make the meaning much clearer, and make it much easier to build automatically from the text – we use the simple rule that if turning the words in the text into objects and linking them together provides an accurate semantic structure, there can be no better way. That means a lot of things become objects – time periods, for example.
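The idea of a relation being an object that another relation can take as a parameter can be sketched in a few lines. This is only an illustration under assumed names (`Entity`, `Relation`, `second_obj` and `shared_subject` are hypothetical and do not come from the system described); it mirrors the Figure 1 example, where the relation for playing tennis is the second object of the relation for using the racket.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Entity:
    """A plain object such as a person or a racket."""
    name: str


@dataclass(frozen=True)
class Relation:
    """A relation that is itself an object, so it can be a parameter of another relation."""
    verb: str
    subject: Entity
    obj: Optional[Union[Entity, "Relation"]] = None
    second_obj: Optional[Union[Entity, "Relation"]] = None


person = Entity("Person")
plays = Relation("ToPlay", subject=person, obj=Entity("tennis"))
# "Person uses racket to play tennis": the playing relation is the second object.
uses = Relation("ToUse", subject=person, obj=Entity("racket"), second_obj=plays)


def shared_subject(outer: Relation) -> bool:
    """True when the nested relation has the very same subject object as the outer one."""
    inner = outer.second_obj
    return isinstance(inner, Relation) and inner.subject is outer.subject
```

Because `plays` is an ordinary object, anything that can be said about an object (that it exists, that it is true, when it holds) can also be said about the relation.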

Our approach to the call centre problem is to automatically read each document beforehand and store the resulting semantic structure. This structure can be loaded into the machine when the call comes in. When loaded, the structure becomes active: a database lookup updates its states and values, which then propagate through the structure. Together with some other materials, including lists of procedures requiring pre-authorisation, this allows any query which requires access to knowledge held in the certificate to be answered.

There are various problems to be overcome – we provide examples from a sample Health Insurance Certificate [2].

Contractual documents over about ten or fifteen pages use defined terms – terms whose definition appears in the document. The definitions may be grouped at the start of the document, and/or may be strewn throughout the document, and/or may be found in a glossary. Even with a glossary it is convenient to define something on the spot if it will only be used once.

Some definitions are specific to a particular section of the document, so that words can have different meanings depending on where they appear. This complicates the answering of questions. Defined terms used in the document will usually be set in a different font or marked in some other way (which may not be reliable – a capital first letter, say, when the ordinary word may also start a sentence). This marking will not usually apply to questions – the person would need to be intimately familiar with the document to know all the defined terms – so the machine must analyse the question to determine whether a defined term is meant.

There will often be errors in the defined terms - as an example:

***Home health care agency*** means a *home health care agency* licensed by the Texas Department of Health.

The object in bold italics is defined in terms of itself – the plain italics represent a use of the defined term in its own definition.

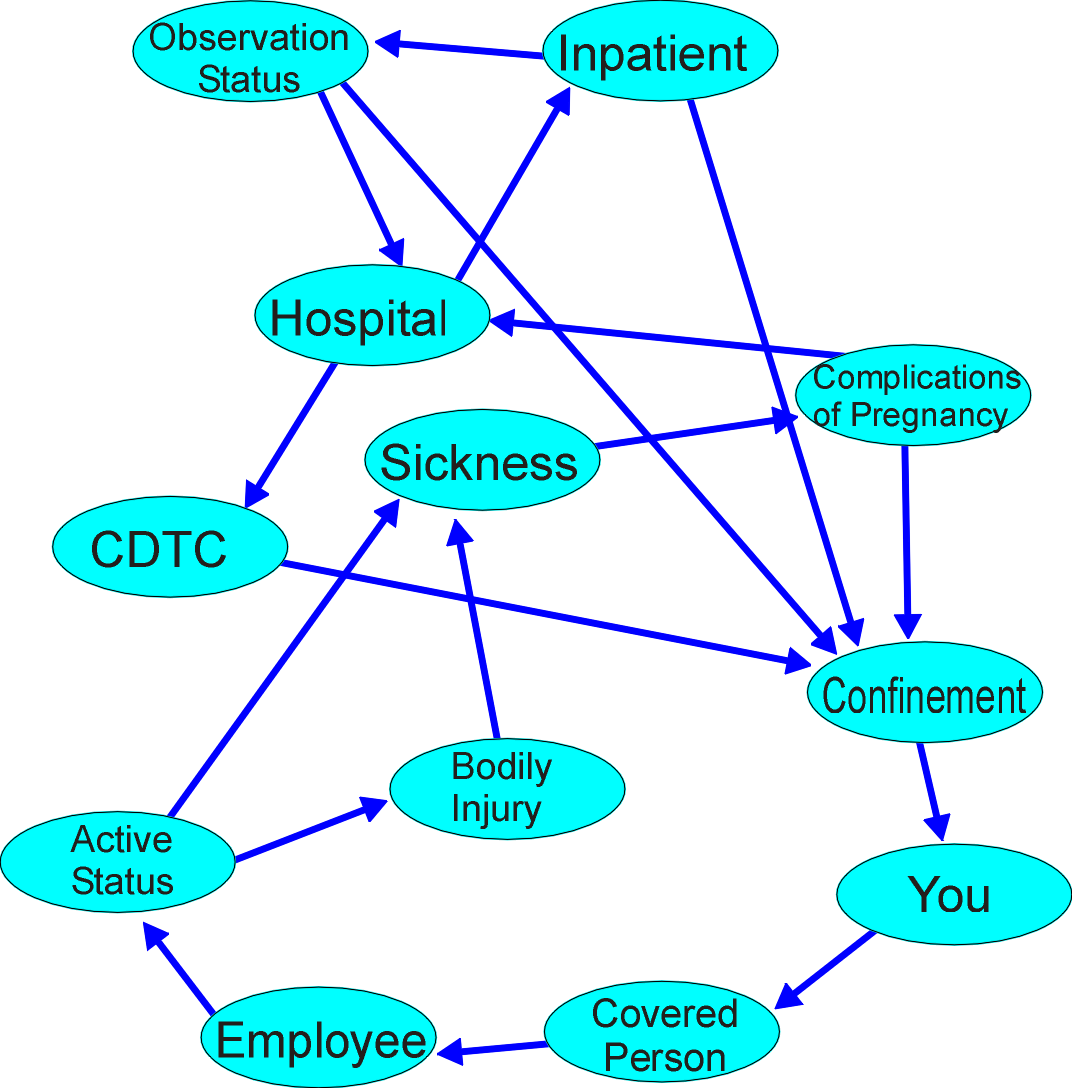

As is typical of dictionary definitions, many of the definitions are circular – a policy is defined in terms of a policyholder, and a policyholder is defined in terms of a policy. Figure 2 shows the loops surrounding the definition of Sickness. The system searches for the order which involves the minimum of not yet defined Defined Terms and terms with multiple meanings.
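One way to picture the search for a reading order is a greedy pass over the definitions. The sketch below is an assumption, not the system's actual method: at each step it picks the term whose definition mentions the fewest Defined Terms that have not yet been read, breaking ties alphabetically. It ignores the second criterion mentioned above (terms with multiple meanings), and the dependency data is invented purely for illustration.

```python
def reading_order(definitions: dict[str, set[str]]) -> list[str]:
    """Greedy order: next read the term whose definition uses the fewest unread Defined Terms."""
    known = set(definitions)          # everything that is a Defined Term
    remaining = set(definitions)
    done: set[str] = set()
    order: list[str] = []

    def unresolved(term: str) -> int:
        # Count Defined Terms used by this definition that are still unread.
        return len((definitions[term] & known) - done - {term})

    while remaining:
        best = min(sorted(remaining), key=unresolved)
        order.append(best)
        done.add(best)
        remaining.remove(best)
    return order


# Hypothetical dependencies, including the policy/policyholder loop.
example = {
    "policy": {"policyholder"},
    "policyholder": {"policy"},
    "sickness": {"covered person", "bodily injury"},
    "bodily injury": set(),
    "covered person": {"policy"},
}
order = reading_order(example)
```

A loop such as policy and policyholder can never be ordered perfectly, so one member is always read with a term still pending; the greedy choice only keeps the number of such pending terms small.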

It is common for a definition to capture a group of objects, such as Advanced Imaging –

Advanced imaging, for the purpose of this definition, includes Magnetic Resonance Imaging (MRI) … and Computed Tomography (CT) imaging. (CAT is also in common use – the system had better know this)

The system places Defined Terms in a Local Dictionary as their definitions are encountered, together with necessary control if the definition is limited to a section of the document. The Local Dictionary is searched before the Global Dictionary when a word is encountered. This introduces the notion of "fixup" – the definition may appear later in the same sentence, so that the term was found only in the Global Dictionary when the sentence was first read, or it may (almost) immediately follow, such as

We calculate deductibles amounts by applying the dollar amount to the net charges. "Net charges" are defined as …..

and then "net charges" is added to the Local Dictionary in the following sentence (notice the problem with the capitalisation of N in Net – usually a quoted definition of a defined term is precise about its capitalisation). This means revisiting the previous sentence with a new meaning for a word combination whose two words were separate objects before. The reading system is continually scheduling jobs for itself – jobs that may be performed after reading the sentence, the section or the entire document (the job may be performed hours later on a large document, or a later job scheduled for earlier execution may remove it).

To avoid searching on every term to see if any of its parents or group headers is a Defined Term, we place the child objects directly in the Local Dictionary. This means MRI will be caught immediately, allowing us to look for conditions applying to Advanced Imaging (this is a good example of why key word searching is not very useful in large, highly structured documents). This brings up the problem of conditional Local Dictionary entry – the conditions for a defined term may be complex. If we are handling questions, and the word appears in both the Local and Global Dictionaries, we have to treat it as having two meanings, and resolve which meaning is meant.
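The lookup order, the direct entry of child objects and the scheduling of a fixup job can be sketched together. Everything here is a simplified assumption: the class and method names are hypothetical, terms are matched case-insensitively as plain substrings, section-limited definitions and conditional entries are left out, and the Global Dictionary entries and definition texts are placeholders.

```python
class Reader:
    """Toy reader with a Local Dictionary that is searched before the Global Dictionary."""

    def __init__(self, global_dictionary: dict[str, str]):
        self.global_dictionary = global_dictionary
        self.local_dictionary: dict[str, str] = {}   # word -> Defined Term it belongs to
        self.sentences: list[str] = []
        self.fixup_jobs: list[tuple[int, str]] = []  # (sentence index, Defined Term)

    def lookup(self, term: str) -> tuple[str, object]:
        key = term.lower()
        if key in self.local_dictionary:
            return "local", self.local_dictionary[key]
        if key in self.global_dictionary:
            return "global", self.global_dictionary[key]
        return "unknown", None

    def read_sentence(self, text: str) -> int:
        self.sentences.append(text)
        return len(self.sentences) - 1

    def define(self, term: str, members: tuple[str, ...] = ()) -> None:
        key = term.lower()
        self.local_dictionary[key] = key
        # Child objects go in directly, so "MRI" is caught without a parent search.
        for member in members:
            self.local_dictionary[member.lower()] = key
        # Earlier sentences that used the words separately must be revisited.
        for index, text in enumerate(self.sentences):
            if key in text.lower():
                self.fixup_jobs.append((index, key))


reader = Reader({"net": "ordinary word", "charges": "ordinary word"})
reader.read_sentence(
    "We calculate deductibles amounts by applying the dollar amount to the net charges."
)
reader.define("Net charges")
reader.define("Advanced imaging", members=("MRI", "CT"))
```

After these calls, `reader.fixup_jobs` holds one job pointing back at the first sentence, and `reader.lookup("MRI")` resolves locally to the Advanced Imaging entry.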

Some defined terms, while not quite defining black as white, may surprise the unwary:

Bone marrow means the transplant of human blood precursor cells… (why doctors would look askance at such a document – the lawyer has trampled all over medical knowledge to suit a legalistic purpose).

Bodily injury means bodily damage - except that some bodily damage, such as muscle strain, is defined as sickness, allowing strong statements about bodily injury.

A large document will have sections and subsections. In some documents they are numbered, allowing the precision of:

5.7.3.4. The On-Board Audio Announcer shall provide …as described in clause 7.10.3.19.3.c.

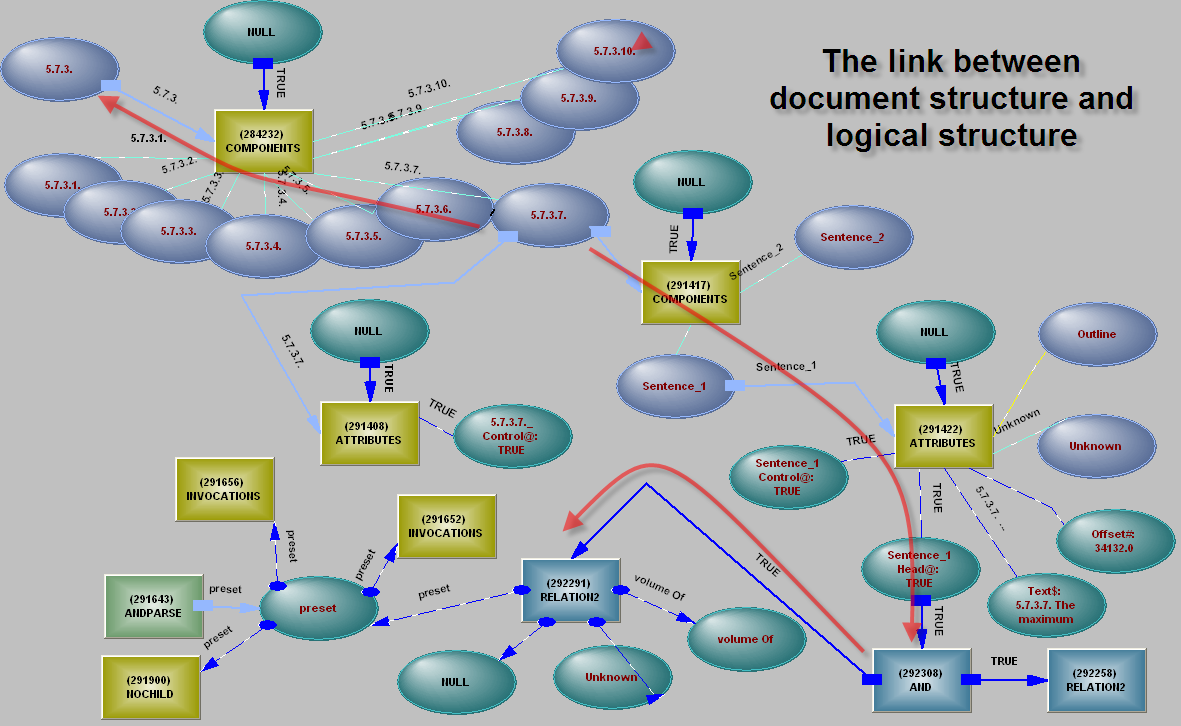

Note that the reference is forward – clause 7 has not yet been seen, so a job is logged for when 7.10.3.19.3.c is encountered, or if it is not encountered, an error is reported at the end of reading. Figure 3 shows the structure that is built to match the structure of the document – the system has to be able to find its way from one relation to another, both through the semantic structure and through the document structure. The document structure also delivers the existential state and the propositional state of the discourse, and allows such statements as "Section 9 is void under the following circumstances". The certificate does not use section numbering – instead it relies on a hierarchy of headings, so there is a structure

ELIGIBILITY AND EFFECTIVE DATES

Effective date

Employee effective date

REPLACEMENT OF COVERAGE

and the elements of the structure can be referenced, as in "The benefits outlined under the 'Schedule of Benefits – Behavioral Health'", where a subsection is referenced. There is clearly an object being referenced, an object that can encompass or contain thousands of relations built by hundreds of sentences, and the object can be controlled existentially and logically.
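The handling of a forward reference to a numbered clause, described earlier in this section, can be sketched as a table of pending jobs. This is an illustrative assumption about the bookkeeping only; the class and method names are hypothetical, and the clause texts are placeholders because the source does not give them.

```python
class DocumentStructure:
    """Tracks clauses seen so far and references waiting for a clause not yet read."""

    def __init__(self) -> None:
        self.clauses: dict[str, str] = {}
        self.pending: dict[str, list[str]] = {}  # target clause -> referring clauses

    def reference(self, source: str, target: str) -> bool:
        """Return True if the target is already known, otherwise log a job for later."""
        if target in self.clauses:
            return True
        self.pending.setdefault(target, []).append(source)
        return False

    def add_clause(self, number: str, text: str) -> list[str]:
        """Store a clause and return the referring clauses whose jobs can now run."""
        self.clauses[number] = text
        return self.pending.pop(number, [])

    def finish(self) -> list[str]:
        """At the end of reading, every reference still pending is an error."""
        return [
            f"clause {target} (referenced from {source}) was never found"
            for target, sources in sorted(self.pending.items())
            for source in sources
        ]


doc = DocumentStructure()
doc.add_clause("5.7.3.4", "(clause text)")
resolved_now = doc.reference("5.7.3.4", "7.10.3.19.3.c")     # forward reference
released = doc.add_clause("7.10.3.19.3.c", "(clause text)")  # job becomes runnable
errors = doc.finish()
```

A heading hierarchy without numbers could use the same bookkeeping with the heading path as the key.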

An automated reading system can be expected to be precise and accurate. While this is good for responding to questions, it is not so good for reading thousands of documents. Each document will have some errors – if the system gags on every error, and after each one the error is corrected by hand and the system starts again at the beginning of the document, the reading time would greatly increase. An alternative is for the system to work out what is probably meant, and continue with a warning. Trivial errors, such as guaranteed spelling mistakes, double commas or a messed up "it’s", are fixed without comment. Someone should glance through the warning file, and only correct errors where the system guessed wrong. In the example certificate, there would be warnings about:

- Improper use of defined term – not shown in italics
- Self-referential definition
- Missing indexed list item
- Erroneous grouping head – "and" should be "or"
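The difference between a silent fix and a logged warning can be shown with a small sketch. It is an assumption-laden illustration, not the reading system itself: it recognises a definition only by the literal phrase " means ", repairs only doubled commas, and raises only the self-referential warning. The names `ReadingLog`, `fix_trivial` and `read_definition` are hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ReadingLog:
    warnings: list[str] = field(default_factory=list)


def fix_trivial(sentence: str) -> str:
    """Repair errors that need no comment; here only doubled commas."""
    return re.sub(r",(\s*,)+", ",", sentence)


def read_definition(sentence: str, log: ReadingLog):
    """Split 'X means Y', warn if Y uses X, and carry on reading either way."""
    sentence = fix_trivial(sentence)
    term, separator, body = sentence.partition(" means ")
    if not separator:
        return None  # not a definition in this toy grammar
    if term.lower() in body.lower():
        log.warnings.append(f"Self-referential definition: {term}")
    return term, body


log = ReadingLog()
result = read_definition(
    "Home health care agency means a home health care agency "
    "licensed by the Texas Department of Health.",
    log,
)
```

The definition is still returned after the warning, so reading continues and a person can review `log.warnings` afterwards.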

The similarity with compiling a program is obvious – there are also some errors which are unrecoverable. Most people ignore compiler warnings – if it seems to work, that is good enough. People are likely to do the same with warnings from an automatic reading system – we are likely to need another system to automatically read the warnings and work out how to avoid them.

Why can’t the system overlook the errors? – it will give wrong answers, and if it is showing context it will dump the call centre operator at a point in the document where they will be confused. If it is rolled out as a web service, the customer or health service provider will have neither the time nor the inclination to work out what is wrong, given that the source of the error may be a hundred pages away (but just a link away in the semantic structure), and a medical specialist would recoil at the way the terms are defined and used.

Just as the discourse object provides existential and propositional states to the sections of the document, and they provide existential and propositional states to the subsections or sentences, some relations, such as "fail" or "think" or "said" provide existential and propositional states to the relations they control. Some of these states have full force, while others can be invalidated without error. For "The date you fail to be eligible under the policy", the ToFail relation receives a logical True and sends a logical False to the ToBeEligible relation, a state that cannot be overridden. For an example like

John thought the man was guilty. The man was innocent.

the first sentence receives true existential and propositional states from the discourse. The ToThink relation passes those states to the ToBeGuilty relation. The second sentence invalidates what John thought, without causing an error.

The existential connection becomes important when the propositional state is false.

He failed to prevent a riot.

The statement implies that he was capable of preventing the riot, otherwise it should have been phrased differently. The ToFail passes an existential True to the ToPrevent relation to represent this implication.
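The passing of states from a controlling relation to the relation it controls can be sketched as follows. This is a minimal model under stated assumptions: each state is a pair of booleans plus a `firm` flag meaning it cannot be overridden, the two transmission rules are simplified readings of the ToFail and ToThink examples, and "The man was innocent" is modelled crudely as a firm False arriving at ToBeGuilty. All class and variable names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class State:
    existential: bool
    propositional: bool
    firm: bool  # True: a later contradiction is an error


# What a controlling relation sends on to the relation it controls.
TRANSMIT: dict[str, Callable[[State], State]] = {
    # Failing implies the controlled action was possible but did not happen.
    "ToFail": lambda s: State(True, not s.propositional, firm=True),
    # A thought passes its states on, but they can be invalidated later.
    "ToThink": lambda s: State(s.existential, s.propositional, firm=False),
}


class Relation:
    def __init__(self, name: str, controls: Optional["Relation"] = None):
        self.name = name
        self.controls = controls
        self.state: Optional[State] = None

    def receive(self, incoming: State) -> None:
        held = self.state
        if held is not None and held.firm and held.propositional != incoming.propositional:
            raise ValueError(f"{self.name}: firm state cannot be overridden")
        self.state = incoming
        if self.controls is not None:
            rule = TRANSMIT.get(self.name, lambda s: s)
            self.controls.receive(rule(incoming))


DISCOURSE = State(existential=True, propositional=True, firm=True)

eligible = Relation("ToBeEligible")
Relation("ToFail", controls=eligible).receive(DISCOURSE)

guilty = Relation("ToBeGuilty")
Relation("ToThink", controls=guilty).receive(DISCOURSE)
thought = guilty.state                         # what John thought
guilty.receive(State(True, False, firm=True))  # "The man was innocent."
```

The second sentence replaces the state that came through ToThink without complaint, whereas a later attempt to make ToBeEligible true raises an error.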

The system has to build object groups as it encounters them. Some group inclusions can be conditional, such as

Covered expenses include:

Diagnostic follow-up care related to the hearing impairment screening for a dependent child from birth through 24 months old.

Surgery, including anesthesia.

Note that some types of surgery are elsewhere excluded, so it is not sufficient to find that covered expenses include surgery.
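Conditional membership of a group, combined with exclusions stated elsewhere, can be sketched with predicates over the caller's facts. The sketch is hypothetical throughout: the `Group` class, the fact keys and the excluded surgery type are invented for illustration, and the age condition is a simplified reading of the hearing screening item.

```python
from dataclasses import dataclass, field
from typing import Callable

Facts = dict
Condition = Callable[[Facts], bool]


def always(_facts: Facts) -> bool:
    return True


@dataclass
class Group:
    name: str
    inclusions: dict[str, Condition] = field(default_factory=dict)
    exclusions: dict[str, Condition] = field(default_factory=dict)

    def include(self, item: str, when: Condition = always) -> None:
        self.inclusions[item] = when

    def exclude(self, item: str, when: Condition = always) -> None:
        self.exclusions[item] = when

    def contains(self, item: str, facts: Facts) -> bool:
        included = item in self.inclusions and self.inclusions[item](facts)
        excluded = item in self.exclusions and self.exclusions[item](facts)
        return bool(included and not excluded)


covered = Group("covered expenses")
covered.include(
    "diagnostic follow-up care",
    when=lambda f: f.get("dependent_child", False) and f.get("age_months", 999) <= 24,
)
covered.include("surgery")
# Hypothetical exclusion; the text only says some surgery is excluded elsewhere.
covered.exclude("surgery", when=lambda f: f.get("surgery_type") == "example-excluded-type")
```

Membership is therefore a question asked with facts in hand, not a fixed property of the list.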

Questions can be posed that return:

- a document context – "show where prolotherapy is excluded"
- an explanation (using context) – "why is the copay $3,500?"
- an object – "which is the primary plan?"
- a logical state – "is prolotherapy covered?"
- a dollar amount – "for heart surgery costing $53,000, how much is the copay?"
- a time – "when will I be eligible for the full benefit on a kidney transplant?"

and any combination of any of these. For questions that return objects, values or times, the context is also shown (that is, the statements that generated the semantic structure which provided the answer). If the question is difficult enough, the answer may involve large slabs of the document. If a dollar benefit is being returned, the system needs to check that the procedure is covered, and does not need pre-authorisation.
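The checks that precede a dollar answer can be sketched as a short guard sequence. This is an illustration only: the function name, the sets passed in and especially the percentage copay are assumptions (the certificate's actual copay rules are not given here), and amounts are whole dollars to keep the arithmetic exact.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Answer:
    amount: Optional[int]  # whole dollars, or None when the benefit is denied
    reason: str


def copay_answer(
    procedure: str,
    cost: int,
    covered: set[str],
    needs_preauth: set[str],
    preauthorised: set[str],
    copay_percent: int,  # hypothetical rule: copay as a flat percentage of cost
) -> Answer:
    if procedure not in covered:
        return Answer(None, "not covered")
    if procedure in needs_preauth and procedure not in preauthorised:
        return Answer(None, "pre-authorisation required")
    return Answer(cost * copay_percent // 100, "covered")


denied = copay_answer("heart surgery", 53_000, {"heart surgery"}, {"heart surgery"}, set(), 10)
granted = copay_answer(
    "heart surgery", 53_000, {"heart surgery"}, {"heart surgery"}, {"heart surgery"}, 10
)
```

The same question can thus return a denial with its reason in place of a value, which is why the context is shown alongside the answer.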

The technique for handling these questions (except to show context) is to turn them into a statement using patterns for interrogative grammar (turned off when reading the document), and then search on the relevant object, state or value. See Figure 4 for a simple example (this is the way we see knowledge – a structure that can be searched on any connection in any direction). Searching for any of them may mean repeatedly moving between the existential, propositional, relational and numerical subnetworks, and sometimes hypothesising and pruning of a set of possibilities. If a value such as copayment is searched for, the searching begins in a numerical network that links the numerical attributes of objects – copayment is an object, its value is an attribute. The object whose attribute is sought cannot be null, so searching in the numerical network will usually lead into the object network, and the question may be answered by a denial of benefit rather than a value.

The structure of the question is built directly onto the semantic structure that was built from the certificate, using the same dictionaries. Searching is simplified because of the direct connection of the structure initiating the search with the structure being searched. If the user has supplied information as a prologue to the question, this is added to the structure when the question is built and energised by the appropriate logical states for the question discourse. After each question, the original structure can be reloaded, or the changing structure can function as a dialogue, with the topological and state changes caused by earlier questions retained.

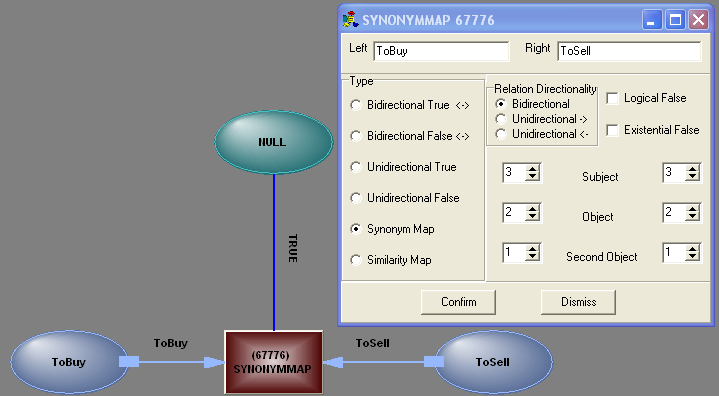

The system uses semantic search as it builds the semantic structure from the certificate, but uses it most intensively when answering questions, with the search precision somewhat relaxed to allow for informal language. The system uses synonyms and antonyms, but they are a fairly trivial part of its navigation through the structure. Of more utility is mapping between relations, such as

John sold a car to Fred.

Fred bought a car from John.

The mapping is shown in Figure 5. There can be mapping between different meanings of the same relation.

John omitted the data from the report.

The report omitted the data.

The second object of one meaning, coupled through a preposition, becomes the subject of another meaning of the relation.
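Both kinds of mapping can be sketched as functions that rearrange the parameters of a statement. The `Statement` record and the function names are hypothetical, relation names follow the ToBuy and ToSell style used here (ToOmit is an assumed name), and prepositions are left out for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Statement:
    relation: str
    subject: str
    obj: str
    second_obj: Optional[str] = None


MIRRORED = {"ToSell": "ToBuy", "ToBuy": "ToSell"}


def mirror(stmt: Statement) -> Statement:
    """Map between ToSell and ToBuy by swapping subject and second object."""
    return Statement(
        MIRRORED[stmt.relation],
        subject=stmt.second_obj,
        obj=stmt.obj,
        second_obj=stmt.subject,
    )


def promote_second_object(stmt: Statement) -> Statement:
    """Map to the meaning in which the second object becomes the subject."""
    return Statement(stmt.relation, subject=stmt.second_obj, obj=stmt.obj)


sold = Statement("ToSell", "John", "car", "Fred")
bought = mirror(sold)
omitted = promote_second_object(Statement("ToOmit", "John", "data", "report"))
```

Applying `mirror` twice returns the original statement, which reflects the symmetry of the mapping.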

A complex technical definition will use multiple relations, in the same way as a defined term uses multiple relations in its definition – a simple mapping between two relations is inadequate. An example is ToMetastasise, shown in Figure 6.

Call centre support or web-based access to an intensive knowledge environment requires large computational resources – we estimate 30,000 computer hours to read 5,000 certificates, resulting in uncompressed memory images consuming a terabyte.

The call centre operator’s task is difficult and stressful – the operator must ensure the answer is robust, as a wrong answer may throw the caller into bankruptcy or cost the insurer tens of thousands, yet the operator must disengage quickly and move on to the next call. Precise and accurate specific knowledge that is rapidly accessible through a natural language interface should assist in reducing the stress and the errors.

[1] How to Wreck a Nice Beach You Sing Calm Incense. International Conference on Intelligent User Interfaces, IUI 2005, January 9-12 2005, San Diego. Henry Lieberman, Alexander Faaborg, Waseem Daher, José Espinosa.

[2] Health Insurance Certificate – retrieved from www.nisd.net/hr/benefits/insurance/docs/2010/HDHP-CHC.pdf

Figure 1: Person uses racket to play tennis

Figure 2: Definition of Sickness

Figure 3: Document structure

Figure 4: Conversion of an interrogative form to a searchable structure – searching on the existential connection – ToDo will match with all action relations, as will "it"

Figure 5: Mapping between ToBuy and ToSell – a symmetrical reflection, with the subject on one side mapping to the second object on the other

Figure 6: Definition of metastasis

Relations which involve movement, such as ToMove or ToSpread, have an object or second object in the form of a FromTo – the capture of two locations in a single parameter.