Active Structure in Law

Abstract. Active Structure is a paradigm intended to fully represent knowledge, including the meaning of dense and complex text, in a structure which is active, visible, undirected, dynamically self-modifiable and capable of structural backtrack. Various ways in which the structure mirrors the complexities of legal text are described.

Keywords. Active Structure, undirected, knowledge activation, logical structure, existence, relations, meaning, self-modifiable, structural backtrack

Introduction

There are many methodologies and techniques used in an attempt to represent some part of the meaning of dense, complex texts such as those used in law and science. Each of these techniques addresses some small facet of the problem, yet a legal document needs only a few lines to include most of the difficulties of its representation - dynamic definitions, intention and causation, implicit logical structure, generalisation, dynamically determined states, connections among objects at long range. For many of these existing techniques, the user faces a formidable, and ultimately futile, task of interpretation: converting a document written in free text to a formalised system which may support perhaps a thousandth part of the meaning of the document. The conversion must not lose so much that the resulting representation is of marginal value, nor, by operating within the constraints of the transforming process, introduce new meanings that do not exist in the text, as artifacts of those constraints.

This paper will describe an active structure approach, one where the text is automatically converted to its underlying structure while conserving its meaning. The target structure is actively involved in the conversion process, eliminating the conceptual boundaries required for the usual approach of problem decomposition. There is too much that is different in the paradigm to cover here and the workings are too complex to describe in minute detail, so only vignettes relevant to the application to legal text will be described. An introduction to the paradigm [1] and a comparison with other paradigms [3] may help to understand how what follows is possible. In short, the paradigm is the antithesis of the usual approach of an algorithm operating on a static structure. The structure may be in stasis when it has nothing to do, but it is never static.

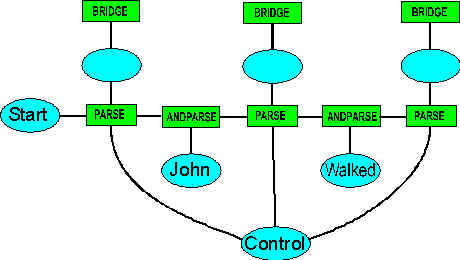

Figure 1 - Fragment of parse chain

1. The Main Processes

The main processes of text transformation are tokenising, parsing and structure building. Tokenising is dependent on the structure and interacts with it, but the parsing and structure building are tightly interwoven, so it is not possible to consider them as cognitively distinct processes, although of course we will describe them as though they are. The processes are automatic.

1.1. Tokenising

This is conventional, except that the tokens are already objects in a structure, acquiring both grammatical and semantic properties through connection. Many words can represent different parts of speech and can have multiple meanings - all of the properties of these different parents are acquired at this point, to be pruned as parsing proceeds. The tokeniser may also assert that something cannot have a particular parent. The system has approximately twenty thousand words and unconditional collocations in its starting dictionary, with a larger, but less well-defined, vocabulary provided from WordNet [4]. Words defined in the document, either through a formal definition or quoted in parentheses, are added to a local dictionary as they are encountered, together with their position of first use in the logical structure of the document (and their scope, if given, as in "In clause 15.a, 'hazard' shall mean...").
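The way a token starts with every candidate parent and is pruned later can be illustrated with a minimal Python sketch. Everything named here is a hypothetical stand-in, not the system's actual design: the three-word `DICTIONARY`, the `Token` class and the `tokenise` function are invented for illustration, and letting the local dictionary take precedence over the starting dictionary is an assumption.

```python
from dataclasses import dataclass, field

# Hypothetical starting dictionary: each word maps to every parent it may have.
DICTIONARY = {
    "the": {"article"},
    "building": {"noun", "present participle", "physical object", "relation"},
    "owner": {"noun", "relation"},
}

@dataclass
class Token:
    text: str
    parents: set = field(default_factory=set)    # all candidate parents, pruned later
    excluded: set = field(default_factory=set)   # parents asserted to be impossible

    def exclude(self, parent):
        """Assert that this token cannot have the given parent."""
        self.excluded.add(parent)
        self.parents.discard(parent)

def tokenise(sentence, local_dictionary=None):
    """Split on whitespace and attach every known candidate parent to each token."""
    local_dictionary = local_dictionary or {}
    tokens = []
    for word in sentence.lower().split():
        # Assumption: words defined in the document override the starting dictionary.
        candidates = local_dictionary.get(word) or DICTIONARY.get(word, {"unknown"})
        tokens.append(Token(word, set(candidates)))
    return tokens

tokens = tokenise("the building owner")
building = tokens[1]                       # still carries all four candidate parents
building.exclude("present participle")     # the kind of pruning parsing performs later
```

In the paradigm described, the pruning is done by the structure itself as parsing proceeds, not by an explicit call as in this sketch.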

1.2. Parsing

The words that make up a sentence are built into a parse chain of the objects the words represent, with parse operators between them. Figure 1 shows a fragment of the initial parse chain (the links to the hierarchical grammatical and semantic knowledge structures are not shown). Objects representing the words are linked to operators which maintain hierarchical consistency across themselves (a participle is consistent with a present participle), and the alternatives are propagated to parse operators, which compare the alternatives across them with the available grammatical structures. If no structures are found for some alternatives or inconsistency is forced on some combinations (an article preceding a verb), the alternatives are pruned and the new subset is propagated back from whence it came (the links are undirected). The result is that pruning initially occurs only at neighbouring words, but can propagate along the chain in either direction. After this phase has settled without error, the control line to the parse operators is activated and the remaining alternatives are propagated to the nodes shown above the parse operators. When the alternatives reach the bridge operators, building of the parse structure commences. Grammatical rules are represented by structures, and the bridge operators attempt to attach copies of them to the initial structure. If they succeed, the additional structure introduces new objects and new bridge operators into the structure, which then allow other structures to attach. Phasing of operation uses connection - some rule structure may require more information than is currently available, so the bridge operator throws out connections where information is needed and waits to be woken by the inflow of information (the particular operator may be swallowed up by structure building from another site in the structure, and thus never wakes).
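The first phase, in which alternatives are pruned between neighbouring words and the pruning spreads along the chain in either direction, can be sketched in Python. This is an illustration under stated assumptions, not the system's mechanism: the `ALLOWED_PAIRS` table is a hypothetical stand-in for the available grammatical structures, and a plain fixed-point loop replaces propagation through undirected links.

```python
# Hypothetical adjacent part-of-speech pairs accepted by some grammatical structure.
ALLOWED_PAIRS = {
    ("article", "noun"),
    ("article", "adjective"),
    ("adjective", "noun"),
    ("noun", "verb"),
    ("verb", "article"),
    ("verb", "noun"),
}

def prune_chain(chain):
    """Prune alternatives between neighbours until nothing changes.

    `chain` is a list of sets of alternatives, one set per word. Each step of
    the inner loop stands in for the parse operator between two adjacent words;
    because both sides of a pair can be pruned, changes spread in either direction.
    """
    chain = [set(alternatives) for alternatives in chain]
    changed = True
    while changed:
        changed = False
        for i in range(len(chain) - 1):
            left, right = chain[i], chain[i + 1]
            keep_left = {a for a in left
                         if any((a, b) in ALLOWED_PAIRS for b in right)}
            keep_right = {b for b in right
                          if any((a, b) in ALLOWED_PAIRS for a in keep_left)}
            if keep_left != left or keep_right != right:
                chain[i], chain[i + 1] = keep_left, keep_right
                changed = True
    return chain

# "the watch broke": the article forces "watch" to be a noun, which in turn
# forces "broke" to be a verb.
forward = prune_chain([{"article"}, {"noun", "verb"}, {"verb", "adjective"}])

# "watch the dog": here the later word prunes the earlier one.
backward = prune_chain([{"noun", "verb"}, {"article"}, {"noun"}])
```

An empty set anywhere in the result would correspond to the error case mentioned above, where no grammatical structure fits.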

Figure 2 - Parse Structure

While the parsing proceeds, it throws out higher level symbols such as noun phrases, prepositional phrases, clauses. As soon as one of these higher level symbols is detected, the semantic structure building process continues, effectively in parallel with the parsing. Semantic structure building may also result in reorganisation of the parsing structure - a process called cutting and healing. Some part of the structure can be severed and relocated, with changes of state resulting across the new connections as they are "healed". In certain circumstances, the parser may wish to hypothesise - that is, build structure and observe the results before proceeding or trying another alternative. Structural backtrack, where structure is built and then undone, allows this. Figure 2 shows the result of parsing a reasonably complex sentence.
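Structural backtrack, where structure is built as a hypothesis and then undone, can be sketched with a journal of edits. This is a minimal illustration, assuming a toy graph of named nodes. The `Structure` class and its `checkpoint` and `backtrack` methods are hypothetical names, and a journal is only one plausible way to make edits reversible.

```python
class Structure:
    """A toy undirected graph whose edits are journalled so they can be undone."""

    def __init__(self):
        self.links = set()     # each link is a frozenset of two node names
        self.journal = []      # record of edits, newest last

    def connect(self, a, b):
        link = frozenset((a, b))
        if link not in self.links:
            self.links.add(link)
            self.journal.append(("add", link))

    def cut(self, a, b):
        link = frozenset((a, b))
        if link in self.links:
            self.links.remove(link)
            self.journal.append(("remove", link))

    def checkpoint(self):
        """Mark the current state so that later edits can be undone."""
        return len(self.journal)

    def backtrack(self, mark):
        """Undo every edit made since `mark`, newest first."""
        while len(self.journal) > mark:
            action, link = self.journal.pop()
            if action == "add":
                self.links.discard(link)
            else:
                self.links.add(link)

s = Structure()
s.connect("cut", "rope")
mark = s.checkpoint()
s.connect("rope", "with a knife")    # hypothesis: the phrase attaches to the noun
s.backtrack(mark)                    # hypothesis rejected, structure restored
s.connect("cut", "with a knife")     # try the other attachment
```

Cutting and healing would use the same two primitives, `cut` followed by `connect` at the new site. The changes of state that flow across the healed connection are not modelled here.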

The grammar is active and impure, in that parsing structure is built based on both grammatical and semantic alternatives flooding through the structure, and the parsing structure is built in parallel with the semantic structure. Its impurity mirrors the amazingly flexible theoretical mess that is natural language.

1.3. Semantic Structure Building

Semantic structure building began before parsing of the sentence - the sentence itself is an object, and it is part of a clause, which is part of a discourse. The discourse has logical states (truth and existence), which are passed down through the clause structure to the logical structure of the sentence. The sentence also has attributes - the text that created the structure, and the currently unknown objects created by the sentence.

As soon as a noun phrase is detected, it is examined. If the token is a simple object, nothing happens. If the token is an object that indicates the presence of a relation - a word such as "owner" - a relation is created from it, with in this case an unknown object of the relation (we will leave the description of relations until later, but for now, relations have logical and existential as well as object connections). If the noun phrase is compound - say "building owner" - a connection is made between building and owner, and the unknown is removed - we know what the owner owns. "Building" has two meanings, the process and the artifact. The connection to the ownership relation forces a relocation of the connection of the object into the hierarchy. It is common for generalised terms to be used - linking two objects in the noun phrase will often force more specificity on the hierarchy connection. In fact, a compound noun phrase is treated as though it contains implicit prepositions between the objects, and the general preposition mechanism is used to unpack it.

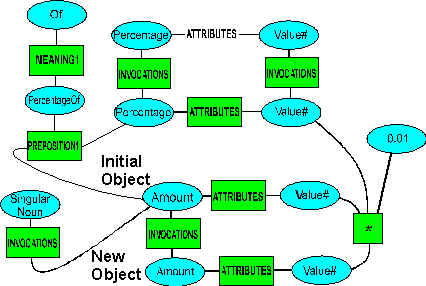

The next symbol detected in legal text is likely to be a composite noun phrase, one made up of noun phrases and prepositional phrases. Prepositions are extensively used in dense text, for their powerful ability to create microcontext. If legal text is to be handled, then prepositionals need to be handled well. Maps are used to establish the ways that prepositionals can link the objects that noun phrases represent. Prepositionals are one of the greatest obstacles to someone attempting to represent the meaning of text in a static structure. Their diversity ranges from the lowly "of" - "a week of delay" - seemingly only able to handle simple connection, through "for", with its ability to create cyclical logical machinery out of thin air in "for each period of six months until termination", to "by", with its ability to spin a verb phrase around and attach a subject. There are approximately fifty prepositions, and each preposition may have from two to twenty maps. These maps are used, first to select the appropriate meaning based on the objects it must connect, and then to guide the structure-building process. The maps themselves are active, in creating and pruning possibilities to determine the meaning or the objects to be created. As new connections form, so the properties of the objects that parsing is handling change while the parsing proceeds. A sample preposition map is shown in Figure 3.

Figure 3 - Map of Percent Of Amount

This map matches against the existing structure, then has directives within it to build new structure, including computational machinery where required (the multiply operator), and new objects, one of which replaces the incoming object in its position in the parsing structure - that is, a numeric percentage is now replaced by an amount with a decreased value, but otherwise inheriting the properties of the initial amount, whatever they are ("three percent of the drugs which enter the country" - we are still talking about drugs, just a smaller quantity). Some maps require more than just the adjacent objects to determine whether they match - the "by" map for passive voice must wait until the verb or participial phrase is available. Relations that are built by prepositional maps have fixed logical and existential connections - "an employee of the company" does not allow the fine control over the logic and existence connections of the employment relation afforded by verb auxiliaries (the connection states may be modified - "a possible employee of the company" - but are not propagatable, and so are not modifiable by activity in the rest of the structure).
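The behaviour of the percent-of-amount map can be sketched in Python. The sketch makes assumptions the paper does not state: the quantity is taken to be a known number, the properties of the amount are reduced to a tuple of labels, and `Percentage`, `Amount` and `percent_of_amount` are hypothetical names.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Percentage:
    value: float                 # 3 means three percent

@dataclass(frozen=True)
class Amount:
    quantity: float
    properties: tuple = ()       # whatever the amount is an amount of

def percent_of_amount(left, right):
    """Return the object that replaces the percentage, or None if the map does not match."""
    if not (isinstance(left, Percentage) and isinstance(right, Amount)):
        return None              # no match; some other map for "of" may apply
    # The multiply operator is the computational machinery the map builds.
    # The new object keeps every property of the original amount.
    return replace(right, quantity=right.quantity * left.value / 100)

drugs = Amount(1000, ("drugs", "enter the country"))
seized = percent_of_amount(Percentage(3), drugs)
```

Here `seized` is still an amount of drugs which enter the country, only with a smaller quantity. In the structure itself the multiply operator remains in place, so a later change to the initial amount would flow through to the result, which a one-off function call does not capture.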

A preposition can create a relation which becomes intertwined with the verb relation -

He cut the rope with a knife

The map for this meaning of with creates a use relation, building from the text a structure which would be more accurately represented in text as

He used a knife to make possible the cutting of the rope, and he cut the rope

When the verb phrase is reached, and there is only one clause in the sentence and the verb phrase is not compound, the logical connection to the relation becomes the logical sentence connection. Verb auxiliaries can intercede and change the location of the connection - "can" changes the logical connection to one of existence - "the plaintiff can sue" is represented by a relation which exists, but whose logical state is unknown, while "the plaintiff may sue" connects to the logical connection of the relation. Verb phrases can be composite, involving infinitives which pick up the same subject or object as the verb relation.

The semantic structure building process is very far from one to one with the text. In most cases, considerable additional structure needs to be built to represent the meaning. Sometimes, it can be quite clumsy to describe in natural language an operation which is simple to represent in the structure. What we haven't mentioned is that, as the semantic structure is being built, it is also being activated in that known objects and states are propagated, and this activation plays a part in its further building. Its full logical activation only occurs when it is linked to the logical control of the sentence.

2. The Elements

2.1. Things

Things acquire their properties by connection. Things consist of entities and relations. Each can have components and attributes, can be members or invocations or alternatives of other things, and can have multiple meanings. Any thing can be connected through a relation. Things have the property of existence, so any thing can be declared to exist or not (or any shade of grey in between).

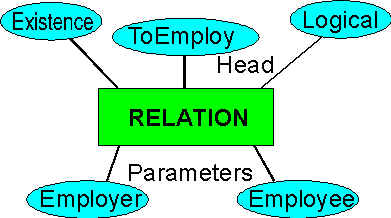

2.2. Relations

Relations have connections which are undirected, so states can flow in or out of the relations, and include logical, existential and time control (time control is exercised through attributes of start time, finish time and duration, any of which can be ranges). The separate connections for truth and existence allow fine control, and remove much of the confusion that can arise when the two are mixed down one line. The head object can inherit properties for the relation, and may be used as a parameter or connection point for other relations. Some relations are single place - "he was punctual". Some involve an overarching transaction relation - A sold B to C - which appears as different two place relations ("buy" and "sell"), but actually has three parameters - for a buyer to buy, there must be a seller. If we take a simple two place relation, such as

He committed the crime

The relation is asserted by the sentence to be logically true, which also means the relation must exist. There is no more specific time information than it occurred in the past, so the time attributes of the relation are set to ranges. Verb auxiliaries used in the text are represented by changes to the control connections of the relation, as

He may have committed the crime.

Probability has been introduced on the logical control, and its existence must be at least as possible - the possibility of the existence of the relation cannot be less than the probability of its logical truth. Obviously, existence can be true while the relation is logically false - it is this switching nonlinearity that people can find confusing.

He could not have committed the crime.

The existence of the relation is false (it is asserted not to exist, which doesn't mean it is not there), and this means its logical state is also false (the relation controls the consistency of the states of its connections - it is an active operator in the structure). If the discourse is true, the sentence logical connection is asserted true, the logical state passes through the negation and then is directed to the existence connection of the relation (the verb auxiliary changed the connection site on the relation as part of the building process). The combination of logical, existence and time control allows the representation of all the states in which a relation may find itself. As you can imagine, it would be possible but extremely tedious for a person to describe the many complex interrelations in a legal document in this way, so it becomes essential for the transformation from text to this form of representation to be automatic. Many of the techniques that have been applied to representations of legal text have been about taking short cuts to allow manual creation of the representation, in the hope that they would be sufficient for the purpose at hand. Just as in any other area of knowledge, there is no reward for insufficient effort - it needs to be done in great detail to be successful. It is done in that detail when a skilled legal practitioner carefully reads the text. If we are to emulate the reading process successfully, we should also expect it to be done automatically and in great detail. Fortunately, this detail can be hidden from the user of the representation.
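The consistency that a relation maintains between its logical and existential connections, as in the three example sentences above, can be sketched in Python. Holding each state as a numeric (low, high) range is an assumption made for illustration, as are the `Relation` class and its method names. Time control and the undirected connections themselves are left out.

```python
class Relation:
    """Toy relation with separate truth and existence states, each a (low, high) range."""

    def __init__(self, name):
        self.name = name
        self.truth = (0.0, 1.0)        # unknown
        self.existence = (0.0, 1.0)    # unknown

    def _settle(self):
        t_lo, t_hi = self.truth
        e_lo, e_hi = self.existence
        e_lo = max(e_lo, t_lo)   # existence is at least as possible as truth is probable
        t_hi = min(t_hi, e_hi)   # so a cap on existence is also a cap on truth
        if t_lo > t_hi or e_lo > e_hi:
            raise ValueError(f"inconsistent states on {self.name}")
        self.truth, self.existence = (t_lo, t_hi), (e_lo, e_hi)

    def assert_truth(self, low, high):
        self.truth = (max(self.truth[0], low), min(self.truth[1], high))
        self._settle()

    def assert_existence(self, low, high):
        self.existence = (max(self.existence[0], low), min(self.existence[1], high))
        self._settle()

committed = Relation("He committed the crime")
committed.assert_truth(1.0, 1.0)         # true, so it must also exist

may_have = Relation("He may have committed the crime")
may_have.assert_truth(0.3, 0.7)          # illustrative probability range

could_not = Relation("He could not have committed the crime")
could_not.assert_existence(0.0, 0.0)     # cannot exist, so it cannot be true
```

Asserting that `committed` does not exist would raise an error in this sketch, which corresponds to the relation detecting an inconsistency among its connections.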

3. Vignettes

We offer some more glimpses of the workings.

3.1. Inheritance

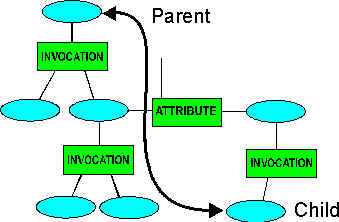

An object can have any number of parents, and the line of inheritance can pass through member operators, invocation operators, alternatives operators and meaning operators. Each of these operators has a logical control, allowing inheritance to be switched. The same logical control is used when matching, to assert that something must or must not have a particular parent or child. Membership is treated as strictly disjoint and matching requiring consistency will fail - a lion is not consistent with a tiger. Invocation does not cause an inconsistency, but the parent does not acquire the properties of its children. For alternatives and meanings, the parent acquires the properties of the children and vice versa as shown in Figure 4 - these operators represent inflections on the line of inheritance.

Figure 4 - Parent and child across a common link

Objects can inherit properties from relations of which they are parameters. It may be that a company or a natural person can own property, but if it is a natural person, then their age must exceed seventeen. The virtual invocation between a particular owner and the definition of property ownership (the invocation of the relation is built, but not of its parameters) will constrain their age. Inheritance through relations, and from multiple meanings, makes the simple formalism of ontologies unsuitable for knowledge of this complexity.

A word like "building" initially has (possible) parents of noun, present participle, physical object (the artifact) and relation (the process). To prevent consistency being mistakenly found between two nouns, an entire line of parentage can be turned off, so all parents that are children of Word will no longer be found. This means that searching for parents must rise right to the top of the hierarchy (typically about six layers) on all the inheritance paths to ensure that no parent object need be excluded, before retreating and including valid parents.
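The search for valid parents when a whole line of parentage is switched off can be sketched as follows. The `PARENTS` table is a hypothetical fragment of the hierarchy, and `ancestors` and `valid_parents` are illustrative names. The sketch climbs every path to the top before deciding which parents to keep, as described above.

```python
# Hypothetical fragment of the hierarchy: each object maps to its direct parents.
PARENTS = {
    "building": ["noun", "present participle", "physical object", "relation"],
    "noun": ["word"],
    "present participle": ["participle"],
    "participle": ["word"],
    "physical object": ["thing"],
    "relation": ["thing"],
    "word": [],
    "thing": [],
}

def ancestors(node):
    """Every ancestor of `node`, found by climbing each inheritance path to the top."""
    found, stack = set(), list(PARENTS.get(node, []))
    while stack:
        parent = stack.pop()
        if parent not in found:
            found.add(parent)
            stack.extend(PARENTS.get(parent, []))
    return found

def valid_parents(node, switched_off):
    """Ancestors of `node` that neither are, nor descend from, a switched-off object."""
    result = set()
    for candidate in ancestors(node):
        lineage = ancestors(candidate) | {candidate}
        if not lineage & switched_off:
            result.add(candidate)
    return result

# Switching off "word" removes all the grammatical parents in one step.
semantic_only = valid_parents("building", {"word"})
```

With `"word"` switched off, only the semantic parents of "building" remain, so two nouns are no longer found consistent merely because both are nouns.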

3.2. Generalisation

People generalise when the import is obvious, as in a sentence such as

The gun's magazine jammed.

Not all guns have magazines, but this one does, so find the appropriate child. The possessive is treated as just another preposition, with maps. The hierarchical structure is searched for parents or children that may have the desired property (this is case-based reasoning, but intertwined with searching of parents for an inherited property). Figure 5 shows an operator joining a parent of the node holding the relation on one side to a child node on the other - parent to parent, parent to child and child to child are all commonly found in text, and any combinations must be searched for to resolve the relation between objects. If a child is found to possess the property, but no parent, the hierarchical connection of the gun object is relocated to the child - the text did not need to be more specific, the meaning can be deduced by the reader. The repositioning of the connection during building avoids a common logical fallacy - a generalisation of an object acquiring different properties from different children. The same repositioning mechanism is used to narrow the meaning of a relation, based on the objects that are its parameters.
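The relocation of the hierarchical connection can be sketched for the gun example. The tables below are hypothetical, only direct children are searched, and leaving the connection unresolved when several children qualify is an assumption of the sketch, not something the paper specifies.

```python
# Hypothetical fragment of the hierarchy and of the components attached to it.
PARENT = {"gun": "weapon", "revolver": "gun", "semi-automatic pistol": "gun"}
CHILDREN = {"weapon": ["gun"], "gun": ["revolver", "semi-automatic pistol"]}
COMPONENTS = {
    "gun": {"barrel", "trigger"},
    "revolver": {"cylinder"},
    "semi-automatic pistol": {"magazine"},
}

def resolve(node, wanted):
    """Return where the object's hierarchy connection should sit, given that it has `wanted`."""
    # An inherited property: climb the parents first.
    current = node
    while current is not None:
        if wanted in COMPONENTS.get(current, set()):
            return node              # the object already has it; no relocation
        current = PARENT.get(current)
    # Otherwise look for a child that has the property.
    matches = [child for child in CHILDREN.get(node, [])
               if wanted in COMPONENTS.get(child, set())]
    if len(matches) == 1:
        return matches[0]            # relocate the connection to this child
    return None                      # none or several: left unresolved in this sketch

relocated = resolve("gun", "magazine")
```

For "The gun's magazine jammed", the connection of the gun object moves to the one child that has a magazine, so later text cannot also attribute a revolver's cylinder to the same object.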

3.3. Mixing Logics

The logic of existence and the logic of truth/falsity are nominally separate and distinct, but are usually freely mixed at connectives in legal text - "cannot and will not" for example. It is then necessary that all the conjunctions and implications in the structure be able to handle whichever form of logical state arrives at their various connections. We have mentioned that the structure is undirected - the logical connectives are undirected in operation, a false consequent ensuring a false antecedent as it does in text (but not in most computer logics).
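The undirected operation of a logical connective can be sketched for a single implication. The `Implication` class is a hypothetical illustration using three states (true, false and unknown). The graded states of existence and truth described elsewhere in the paper are not modelled.

```python
class Implication:
    """Undirected 'antecedent implies consequent' over True, False or None (unknown)."""

    def __init__(self):
        self.antecedent = None
        self.consequent = None

    def set_antecedent(self, value):
        self.antecedent = value
        self._propagate()

    def set_consequent(self, value):
        self.consequent = value
        self._propagate()

    def _propagate(self):
        if self.antecedent is True and self.consequent is False:
            raise ValueError("inconsistent states at implication")
        if self.antecedent is True and self.consequent is None:
            self.consequent = True       # state flows forward
        if self.consequent is False and self.antecedent is None:
            self.antecedent = False      # state flows backward

forward = Implication()
forward.set_antecedent(True)     # the consequent becomes True

backward = Implication()
backward.set_consequent(False)   # the antecedent becomes False
```

A true consequent, or a false antecedent, tells the other side nothing, so in those cases the state on the other connection stays unknown.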

3.4. Intention

In law, much can hinge on the intention surrounding an action or inaction. If we take a sentence such as

He sold his house to pay his debts.

and we ask why did he sell his house, the answer cannot be that paying his debts made him sell his house, because the paying action follows the selling action, and cannot be the cause. Instead, the action of selling made possible the action of paying - he sold his house to make possible the paying of his debts (make possible means the existential connection on the paying relation is true). We have no evidence that he did pay his debts, so the logical connection on the paying relation can be no more than a definite maybe (a logical value somewhere between "we don't know anything" and true), and its time information is in the future. The chain of intention will rarely be as simple as this, but it is much easier to find by separating what happens from what is being made possible.

4. Uses

The system, having read and transformed legal documents into an active structure (at roughly the speed of a careful human reader), can be used in several ways.

4.1. Search

A search string goes through the same transformation into structure as the original text. The matching of the two structures is thus greatly simplified, as words have been turned into entities and relations, and synonyms between entities or relations or higher level structures allow for a much simplified matching process. The transformed structure is not invariant by any means, but a great deal of the apparent textual variability - the difference between active and passive voice, the difference between using nouns or verbs or adjectives or adverbs to say the same thing, or the difference between short, sharp sentences and long, convoluted and rambling ones - has been stripped away, together with the prolix tangles of prepositional chains that make much legal text so forbidding.

4.2. Simulation

The structure represents the text, and the structure can be activated. That is, changes in the states of the objects in the structure will result in the structure moving to a new state which respects the constraints implicit in the text and explicit in the structure. Activation can be occurring at several levels - the user may ask for some state or condition or process, which may require considerable hypothesising by the system to achieve.

4.3. Answering Questions

Not every user will know how to phrase a useful search phrase. Casual users may wish to propose a scenario and ask whether the text is relevant. This requires both search, to find the location in the structure that applies, and simulation to bring the states in the structure into agreement with the scenario. The active structure can do both these things together (and frequently they are intertwined, one depending on the other in small steps, which is why the structure needs to be visible to itself), making it suitable for knowledge dissemination to an audience who would rather not carefully read dense legal text nor think in precise legal terms.

4.4. Consistency Checking

Even lawyers get tired or bored in reading reams of legal text. An assistant which checks that every 't' is dotted (or that an 'i' is crossed) can be of significant value, particularly to avoid complete re-reading after every small change. With its rich logical environment and its ability to activate its structure, the system can find and report inconsistencies at any level of structure or state, including those which are only detectable by cycling the meaning through time.

5. Restrictions and Limitations

As you would expect, the system leans towards the precise. It maintains a strict distinction between "can" and "may" and between "possible" and "probable", while otherwise allowing existence and truth states to be freely intermixed. Membership is strictly disjoint, although this can be avoided by the creation of a separate class for things like ligers. For reasons of efficiency, the smallest computable time unit in a simulation is a day. The structure is currently limited to around a million elements and sentence parsing is currently only implemented for the declarative third person textual form.

Conclusion

The Active Structure paradigm has been developed as a generalised method of representing and activating knowledge in applied science. Its development was predicated on the belief that short cuts in cognitive areas lead to the dead end of invalid reasoning. Put another way, if there are meaningful features in the text, then their analogs should appear in the structure that represents the meaning of the text. The paradigm would seem to include many of the techniques currently used, such as case-based reasoning or deontic logic or ontologies, but it is no mere collage of the different methods. It is instead an attempt at a universal structure that underlies them all, and of which they are small and typically incomplete facets. Its application to legal documents would seem worthwhile, as its use of a dynamically self-modifiable structure to represent text gives it some of the text handling capabilities of a skilled legal practitioner.

References

[1] Introduction to Active Structure

[2], [3] Acknownet report - condensed form

[4] WordNet – from the Cognitive Science Laboratory at Princeton University