No matter how many grammar rules there are, or how many semantic operations use the Semantic Octopus, sooner or later one reaches the point where ambiguity can only be resolved by trying something. This is the rationale and purpose of Constraint Reasoning. The causes of ambiguity include:

A word can be a noun or a verb ("costs", "needs") and the local context is not adequate to resolve it.

A word can be an active verb or a participial.

.....the house described in the brochure....

– looks like a participial.

The owner of the house described in the brochure how he came to build it

– active verb.

A word like "as" or "for" can be a subordinate conjunction (joining two clauses) or just a preposition, but it is not always easy to tell.

A trailing noun phrase can group with an adjacent prepositional noun phrase, or with an object further left. The more that is known about the objects, the more natural a grouping can be. But a situation can arise where near grouping is possible, yet far grouping is more natural (a higher similarity).

If there is only one ambiguity in a sentence, it can usually be resolved with a grammar rule or a semantic connection, but having two or more is not unusual. Many situations can be resolved by having patterns that run along the parse chain (see CHECKLEFT). A word can’t be an active verb if there is no feasible subject to its left, no matter how far we look. Similarly, "as" must be a preposition if there is no verb phrase to its right to allow a clause. It is a good idea to eliminate all possibilities with simple checks like these before rolling out the big guns to evaluate overall ambiguity by setting values, because constraint reasoning is expensive in time.
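The two checks just described can be sketched as follows. This is a minimal illustration only, not the system's CHECKLEFT mechanism: the `Symbol` class, the category names and the `prune_until_stable` function are all assumptions made for the example, and the "feasible subject" and "verb phrase" tests are reduced to simple set lookups.

```python
from dataclasses import dataclass

# Hypothetical category names; the real system's symbols may differ.
SUBJECT_LIKE = {"Noun", "Pronoun"}
VERB_LIKE = {"ActiveVerb"}


@dataclass
class Symbol:
    text: str
    options: set  # readings still possible for this symbol


def prune_once(chain):
    """Run both cheap checks along the chain; return True if anything changed."""
    changed = False
    for i, sym in enumerate(chain):
        if "ActiveVerb" in sym.options and len(sym.options) > 1:
            # No feasible subject anywhere to the left: cannot be an active verb.
            if not any(s.options & SUBJECT_LIKE for s in chain[:i]):
                sym.options.discard("ActiveVerb")
                changed = True
        if "SubordinateConjunction" in sym.options and len(sym.options) > 1:
            # No possible verb anywhere to the right: no clause to introduce.
            if not any(s.options & VERB_LIKE for s in chain[i + 1:]):
                sym.options.discard("SubordinateConjunction")
                changed = True
    return changed


def prune_until_stable(chain):
    """Repeat the checks, since removing one reading can enable another removal."""
    while prune_once(chain):
        pass
    return chain


chain = prune_until_stable([
    Symbol("as", {"Preposition", "SubordinateConjunction"}),
    Symbol("a", {"Determiner"}),
    Symbol("guide", {"Noun", "ActiveVerb"}),
])
```

In this toy chain, "guide" loses its verb reading first (nothing to its left can be a subject), and only then can "as" be fixed as a preposition, which is why the checks are repeated until nothing changes.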

But there will be a hard core of cases where it is necessary to set objects to be particular things and observe the result. When sentences are long – 200 or more words – there can be a dozen centres of ambiguity. That doesn’t mean that 2^12 cases have to be tried – many of the ambiguities will resolve as soon as one of them is set. An obvious initial candidate is one near the middle of the sentence.
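As a small illustration of choosing where to start, the sketch below orders the centres of ambiguity by their distance from the middle of the sentence. The function name and the use of word positions are assumptions for the example; the text only says that a candidate near the middle is an obvious first choice.

```python
def order_by_centrality(ambiguous_positions, sentence_length):
    """Return word positions of ambiguous symbols, nearest the middle first."""
    middle = (sentence_length - 1) / 2
    return sorted(ambiguous_positions, key=lambda pos: abs(pos - middle))


# Upper bound if every one of twelve two-way ambiguities were independent.
worst_case = 2 ** 12

# Five ambiguous words in a 200-word sentence (positions are made up).
order = order_by_centrality([3, 40, 98, 150, 197], 200)
```

Setting the most central symbol first gives the resumed parse the best chance of propagating in both directions, so the number of settings actually tried should fall well short of the worst case.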

Often several settings will result in resolution of the sentence (parsing runs all the way to DiscourseSentence), and it then becomes necessary to handle "fitness of purpose": the sentence may resolve, but if the meaning is silly, it is discounted against other meanings. Eventually, even this isn’t enough, and more than one meaning needs to be held in the resulting structure and resolved later.

When parsing fails to complete, the parse chain is searched for ambiguous symbols – NounVerb, InterimVerbPhrase, etc. They are connected to an operator, which manages the constraint reasoning process. The operator sets one InterimVerbPhrase symbol to be a participial, say, and activates the BRIDGE operator for the symbol, causing normal parsing to resume. When parsing terminates without reaching DiscourseSentence, the operator checks for any remaining ambiguous symbols (some will have resolved and become buried in the structure) and continues with one of those. If DiscourseSentence has been reached, it stores the settings, undoes all the changes and moves on to the next set of settings. After completion, if there is only one successful set, this is used. There may be no successful set, or there may be several sets. If several, these need to be examined for "fitness of purpose".
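The loop described above can be sketched as a small depth-first search. This is a simplified stand-in under stated assumptions: the real system works through operators and the BRIDGE mechanism on the parse structure, whereas here `resume_parse` is a hypothetical callback that reports whether DiscourseSentence was reached and which ambiguous symbols remain, and removing a key from `settings` stands in for undoing the changes.

```python
from typing import Callable, Dict, List, Optional, Tuple

Settings = Dict[str, str]
# (reached DiscourseSentence?, symbols still ambiguous -> candidate readings)
ParseResult = Tuple[bool, Dict[str, List[str]]]


def explore(resume_parse: Callable[[Settings], ParseResult],
            settings: Optional[Settings] = None,
            successes: Optional[List[Settings]] = None) -> List[Settings]:
    """Set one ambiguous symbol at a time, resume parsing, collect successes."""
    settings = {} if settings is None else settings
    successes = [] if successes is None else successes
    reached, remaining = resume_parse(settings)
    if reached:
        successes.append(dict(settings))  # store this set of settings
        return successes
    if not remaining:
        return successes  # parse failed and nothing is left to try
    name, readings = next(iter(remaining.items()))
    for reading in readings:
        settings[name] = reading
        explore(resume_parse, settings, successes)
        del settings[name]  # stand-in for undoing all the changes
    return successes


# Toy parser (an assumption for the example): the sentence only resolves
# when "described" is an active verb, whatever "as" is set to.
AMBIGUOUS = {
    "described": ["Participial", "ActiveVerb"],
    "as": ["Preposition", "SubordinateConjunction"],
}


def toy_parse(settings: Settings) -> ParseResult:
    if settings.get("described") == "ActiveVerb":
        return True, {}
    return False, {k: v for k, v in AMBIGUOUS.items() if k not in settings}


successful = explore(toy_parse)
```

Note that the successful set never mentions "as": once "described" is set to an active verb the toy parse runs to completion, which mirrors the point that many ambiguities resolve as soon as one of them is set.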

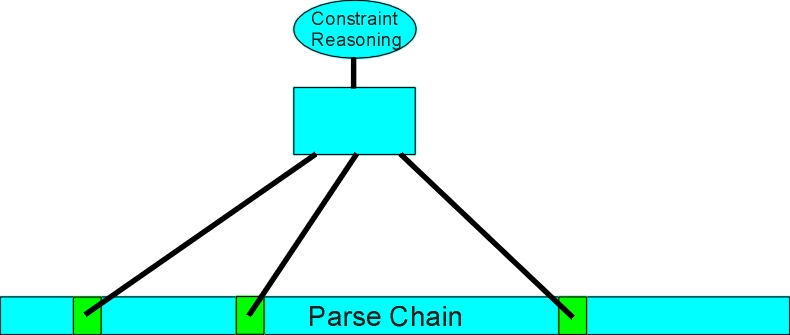

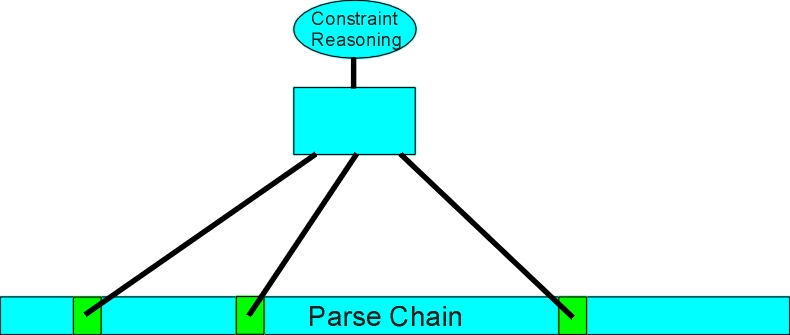

The diagrams above show the process. The parse chain of symbols is drawn as linear; it is anything but, although the procedure for finding adjacent symbols can make it seem so.

The parse and semantic structure is "backtrackable". That is, whatever structure is built, changed or added to, it can be undone (with the guarantee that every effect is undone, because all effects translate into changes of the structure). The effects can go everywhere – a "such" may have gone back to link to structure built by a previous sentence, or a word may have been added to the dictionary by the action of the sentence running to completion – and all these changes backtrack, because the structure does everything (including providing the structure of the dictionary). Backtrack is not "on" during normal parsing – it would be too costly – but normal parsing should never need to backtrack, as its operations are not tentative (although they can be overlaid, which gives a similar result in undoing a symbol built on wrong assumptions). Backtrack is used locally during other phases of parsing, for instance in deciding between prepositional maps.
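One common way to obtain this kind of guarantee is an undo log (a "trail") that records the previous state of anything changed while backtracking is switched on. The sketch below is an assumption about how such a mechanism could look, not a description of the actual implementation; the `BacktrackableStore` class and its key names are invented for the example, and a flat dictionary stands in for the full structure.

```python
class BacktrackableStore:
    """Key/value store whose changes can be undone back to a marked point."""

    def __init__(self):
        self._data = {}
        self._trail = []  # undo log of (key, existed_before, old_value)
        self._depth = 0   # recording is "on" only while a trial is open

    def set(self, key, value):
        if self._depth > 0:
            self._trail.append((key, key in self._data, self._data.get(key)))
        self._data[key] = value

    def get(self, key, default=None):
        return self._data.get(key, default)

    def begin(self):
        """Switch recording on and return a mark to undo back to."""
        self._depth += 1
        return len(self._trail)

    def undo_to(self, mark):
        """Reverse every recorded change made since the mark, newest first."""
        while len(self._trail) > mark:
            key, existed, old = self._trail.pop()
            if existed:
                self._data[key] = old
            else:
                del self._data[key]
        self._depth -= 1


store = BacktrackableStore()
store.set("dictionary:cat", "Noun")          # normal parsing: not recorded

mark = store.begin()                         # tentative phase starts
store.set("symbol:described", "Participial")
store.set("dictionary:brochure", "Noun")     # side effect on the dictionary
store.set("dictionary:cat", "Verb")          # overwrite of existing structure
store.undo_to(mark)                          # every effect is reversed
```

Because the dictionary entries live in the same store as the parse symbols, they are restored by the same mechanism, and nothing is logged outside a tentative phase, which keeps normal parsing cheap.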

For statements like "The cat sat on the mat.", this sort of high-level intervention is unnecessary, but it becomes essential for long sentences in complex text that carry some worthwhile semantic value. In essence, it is no different to when you find you have to go back and read a sentence again, because it didn’t make sense the first time.