Constraint Reasoning in Parsing

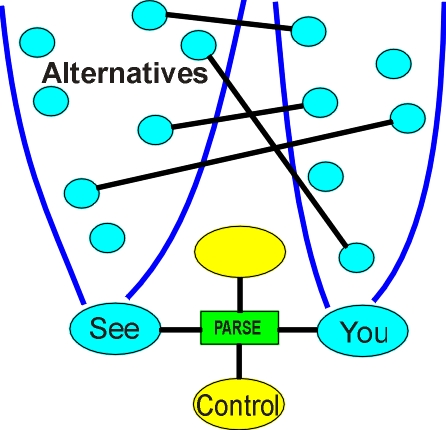

Constraint reasoning is used throughout the parsing and semantic processes. It starts as soon as the parse chain is created. Word objects are strung between PARSE operators, and they are linked to the base vocabulary and its structure, so each word object acquires all the alternatives available to it through its invocations and member functions.

Word Level

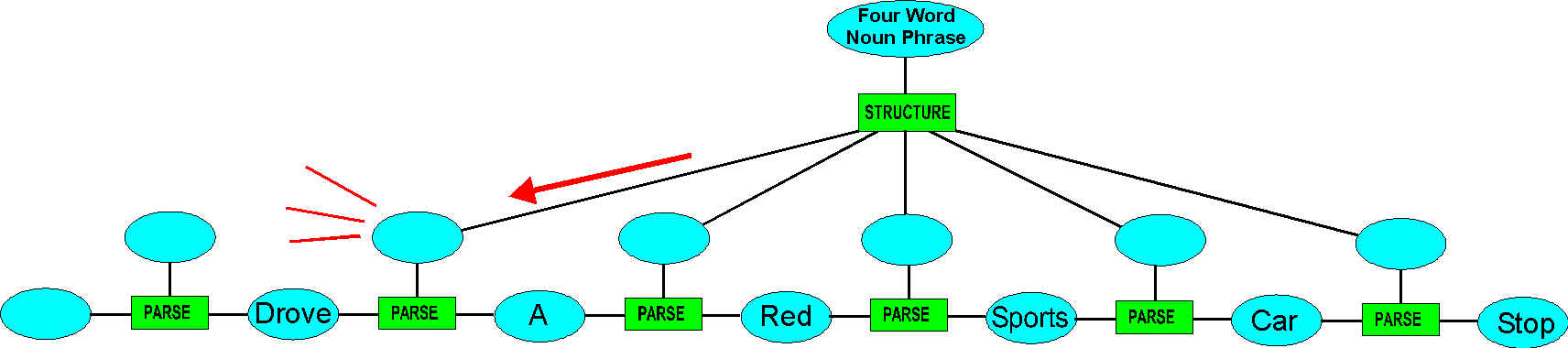

For many words, there are alternative parts of speech – "while" can be a noun, a verb, a (special kind of) adverb. These alternatives would complicate parsing, so as many as possible are removed with reference to the neighbouring words – if the word preceding "while" is the article "a", then "while" cannot be a verb or an adverb. What the PARSE operator does is first find every possible alternative that either side can be, then find every possible pair available from STRUCTURE templates (the templates can have from two to ten legs – only adjacent ones are checked by the PARSE operator - more extensive checking comes later).

The alternatives that have no structure to support them across the operator are

removed.

The alternatives that have no structure to support them across the operator are

removed.

While the diagram indicates that only two words are compared at a time, the change in alternatives is propagated out of the PARSE operator into its neighbour, so the cutting may propagate all along the chain, and become cumulative with other cutting. The result is that each word is compared with at least its two neighbours, and more if cutting crosses the operators.

The STRUCTURE templates are good at adding general cases, but are not good at enumerating a number of special cases that deviate from a general case. One operator (NOTCONSISTENT) behaves as a NOT rather than as an adder of possibilities. If it is encountered from one side, and would link to the other side of the PARSE, it is used to remove alternatives. For example, "being" can be a noun, a verb auxiliary or a present participle. If "being" is the first word in a sentence, then it cannot be a noun. Rather than have multiple structures for every possible case, it is easier to enumerate the few cases that are not possible.

It is important to note that a word's initial neighbours may not be related to it because of discontinuities in the sentence, and pruning based on neighbours (either by PARSE or NOTCONSISTENT) may need to be undone if the topology of the parse chain is changed - see A Pipeline to Failure.Bridge Level

When cutting has settled at the word level, a control pin on the PARSE operator is signalled and it sends values to its symbol pin (pin 1) and so to a BRIDGE operator connected to it. The BRIDGE operator looks for structures that would bridge to nearby symbols (a full match on all the symbols of each STRUCTURE is now performed, so there may be many fewer possibilities).

If no structure is found, the grammar is incomplete or the sentence is ungrammatical. If multiple structures are found, precedence is checked, which may remove some of the patterns. Then either the patterns are insufficiently discriminatory or the sentence is ambiguous. The fault may still clear, based on building by other patterns. If only one possible structure is found, a copy of the STRUCTURE is built across the PARSE symbols, and the new STRUCTURE activates its own BRIDGE operator. The STRUCTURE also reduces the alternatives that the PARSE node is radiating for connection from the other direction, to reflect the symbol it has taken up on one side. Only alternatives that can link to that symbol can be permitted to remain.

At the next level up, symbols on top of structures usually only have one value, but they also acquire alternatives for matching against other structures, and these alternatives need to be constrained to match any connection already made.

At several points in the parsing process, parts of the parse chain are cut out, and the gap healed. This results in different symbols requiring to be propagated along a path, requiring the old symbols to be killed first. Consistent reasoning, the continuous reduction in consistent alternatives, is interspersed with killing of newly invalidated symbols. See Cutting and Healing Dynamic insertion is also being used, to handle all the symbols that are elided by skilled users of the language. A further wrinkle in Constraint Reasoning occurs where grammatical objects acquire new properties as a result of their context. The result is that the parse chain is an extremely dynamic structure, most dissimilar to the conventional approach to Constraint Reasoning.

Sometimes ambiguity can only be resolved by setting one alternative and observing the result, backtracking if there is an error, or comparing the likelihood of one scenario with another. Here is an example:

The tenant evicted by the manager charged with fraud sued for damages.

If we take this pattern

SNP IVP IPP IVP IPP IVP IPP

(SNP – SubjectNounPhrase, IVP – InterimVerbPhrase, IPP – InterimPrepositionalPhrase)

The active verb can occur at any of the IVP positions, as shown by these three examples:

| The tenant sued for damages caused by faulty plumbing contracted with another company for such work in future. |

| The tenant evicted by the manager sued for damages caused by faulty plumbing. |

| The tenant sued for damages caused by faulty plumbing authorised by the landlord. |

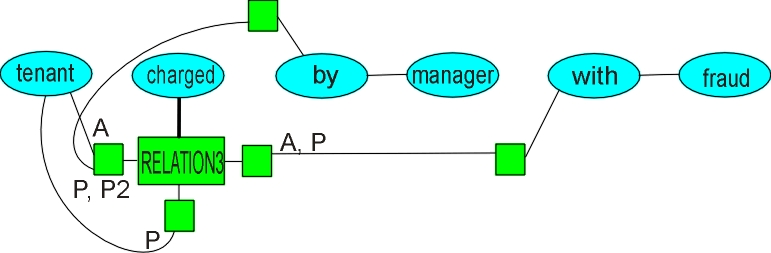

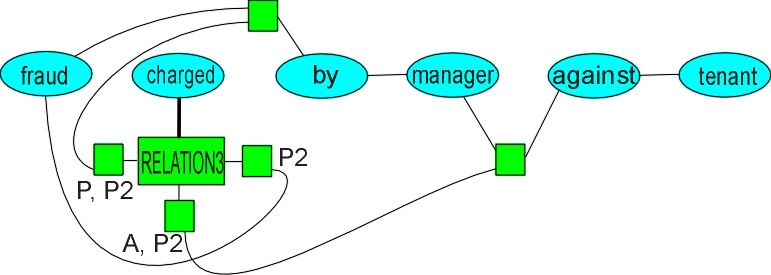

We build a relation for each of the IVPs, making all possible connections to the other

objects in the sentence. For a two-place relation, we have Active and Passive, and for a

three-place, A, P and P2 – passive second object. We set up similar machinery for the

prepositions, giving

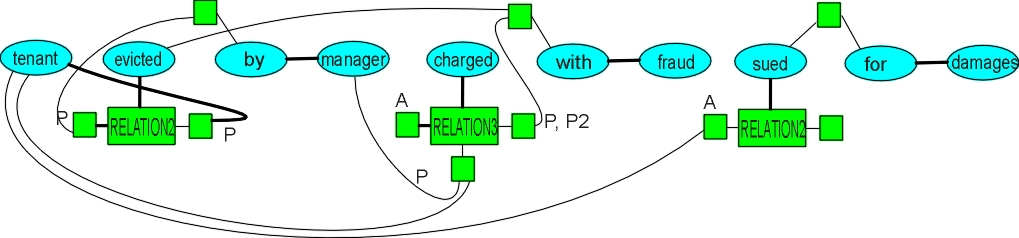

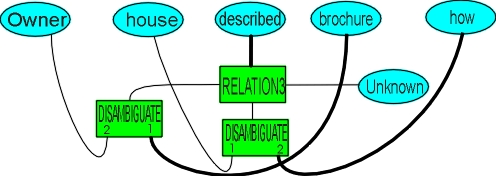

The small green squares are disambiguation operators. They manage both the multiple possible connection points for one type of connection, the difference between Active, Passive and P2 connections, and also keep track of the different senses of relations, as these may allow different sorts of objects to be subject or object of the relation.

If we have a single connection on any object, then it must be that alternative, as no connection would be an error. Here, we have Active and Passive for the connection to "with fraud", as the fragment could be

"tenant charged by manager with fraud" – Passive

"manager charged tenant with fraud" – Active

but the connection cannot be P2, so all P2 alternatives on the relation are removed. The "by manager" can only be a Passive use of "charged" – nothing else is possible - so Active alternatives are removed.

A similar case for passive second object

Only a P2 connection is possible, otherwise "charged" has no left link. If there were a possible left link, such as

........................the house described in the brochure............

The owner of the house described in the brochure the care he took in building it.

Then exploration is necessary further afield. The second example here ends up with an object for "described", making it clear what part of speech it is.

Going back to the original example:

"fraud" can’t be an object of "sued" – must be person. "manager" is noun phrase of preposition – can’t be subject of verb phrase. We also know other things:

"evict" refers to a tenant, not a manager. It is possible that "tenant evicts subtenant", but if we have to make a choice with no other information, "tenant evicted" would result in "evicted" being a participial.

"evicted by manager" – the "by" is a way of linking to the subject for a passive use of "evict", set up by a relation prepositions form. We know what type of object the subject should be – "evicted by the 15th of the month" would fail as the source of the subject. If the preposition is not supplying the subject, there is no other target to the left of tenant. The other uses of "by" – location "by the lake" – not possible, as "evict" is an abstract relation (it does have a location, but that comes through "from the premises"), or "by" meaning "by means of" – this one is possible, as "the landlord evicted (tenants) by unscrupulous means whenever the rental market rose". What we can say is that the passive use is most likely, with a small probability of some other construction, such as

The subtenant was troublesome. The tenant evicted by the manager using a subterfuge.

But small probabilities are possible and in hundreds of statements they mount up, and we need a high accuracy overall, so we need to be more sure. We can do this by creating a structure that holds all the possibilities and then generating each in turn by hard setting and removing invalidated structure.

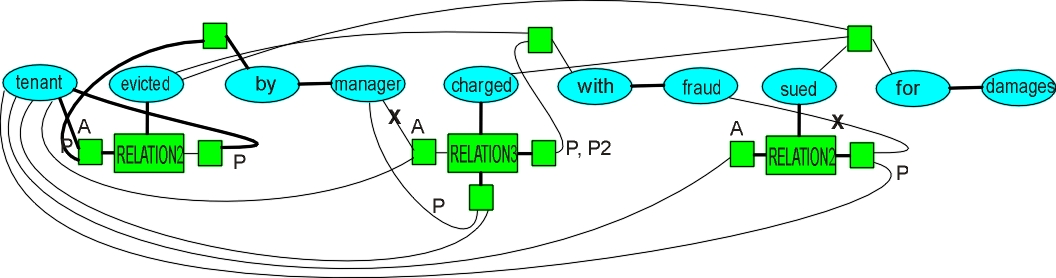

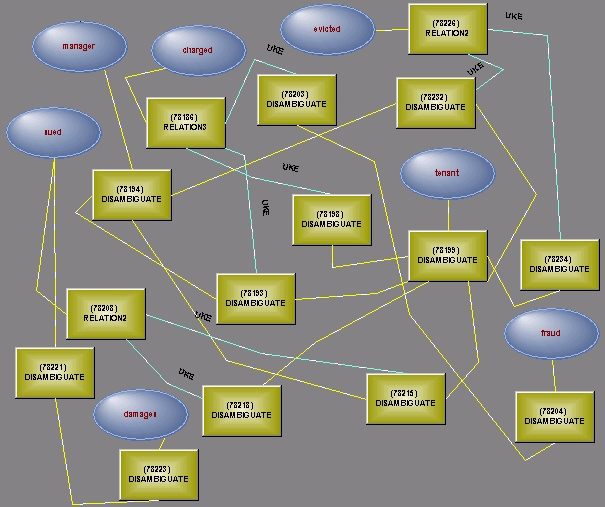

Here is a view of the structure as built. What is not shown is the marking of the links (A, P etc. and the relation sense that created them – different senses may allow different types of object) and their orientation – links from object to object are oriented left to right to match the ordering in the sentence, making it easy to remove links that span an active verb.

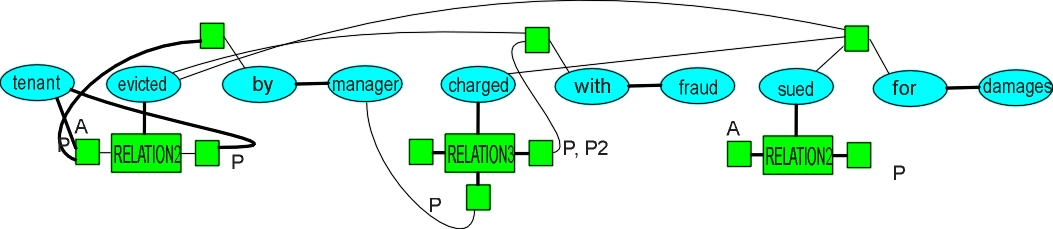

Let’s say we make "evicted" an active verb. No link from a participial or prepositional can cross the active verb, unless there is a relative pronoun to its left, so "tenant" now can’t be the object of "charged". We remove those links, giving

Now "sued" has no link from its left – the alternative of making "evicted" the active verb fails. We do the same for "charged", again get "sued" with no link to the left. If we make "sued" the active verb:

We have no dangling objects. We have two possible targets for "with fraud" – use the closer, better fit – ToCharge has a BaseRelation as second object using "with", ToEvict does not.

"charged" could go with tenant or manager – use the closer one if no other preference. If there had been a comma or a conjunction,

The tenant, evicted by the manager and charged with fraud, sued for damages.

then we would have rolled up the two participials into an object group and pointed both at "tenant".

We cannot guarantee there is an active verb in the sentence – it might have been a heading which said

12) Tenant Evicted

If the tenant is evicted then......................

We guard against sentence fragments as headings, and use best fit – here the "Tenant" is more likely to be the object than the subject.

The example illustrates several things:

| The dense interconnection necessary to represent all possible connections in a relatively simple sentence. | |

| The strong link between semantics and structure grammar – the fact that "fraud" can’t be an object of ToSue meant that several alternatives were pruned. | |

| The power of structure grammar – forcing an InterimVerbPhrase to be active cuts links that span it, which cause further cutting and failure of the alternative. | |

| The complexity that three-place relations introduce. |

The Generate and Test of Constraint Reasoning is sitting on a mountain of deterministic analysis, and provides the necessary coup de grāce.

There could be an argument here which says - just create all the possible parses and let something else get rid of the junk. It is a good rule not to create what you already know (or can easily find out) to be garbage. The other point is that the downstream "thing" would have to do exactly the same process – generate possibilities and flow the consequences, so nothing would be gained by creating a lot of useless alternative structures, and it might as well be done at the earliest point it is possible to do so.

Structure Matching Using Constraint Reasoning

The growth of the parsing structure also provides the structure for resolution of meaning. Relations and prepositions have their meanings represented by maps - a map for percentage of quantity looks like

The map contains instructions for which parts need to be matched, which parts represent actualisation of virtual structure, and which parts are built from the map. The map is not static, as it creates a new object which replaces the original object as part of its operation. Many maps "map forward", in that the map changes as it is matched against the structure - matching the subject of a relation will change the kinds of (verb) objects the relation can have.

Each preposition or relation may have between one and twenty meanings, so a sentence with several clauses containing verb relations, each with half a dozen prepositional or participial phrases , gets to have a huge range of possibilities - precisely why so many prepositional phrases are used, their successful resolution providing a very specific context. Many of the possibilities can be immediately pruned (for most prepositions by looking at the types of objects on either side), but there will still be many combinations left, some of which will involve rearrangement of the sentence structure.

When matching maps to structure, we need all the techniques of constraint reasoning. We are dealing in sets, influences may come from many directions, we may need to suspend a job while we wait for information, in producing a new set for another node we may change the set at the node we are sitting on. It is complicated by the fact that matching may be needed against parents or children, and the map may be changing under us as states are transmitted through it. These problems start as soon as we move away from the single operator joining two points.

A simple example

A single map may have as few as one input, and as many as four. The particular map shown has two input points, which correspond to the objects generated by the words in the text on either side of a preposition. When these objects are propagated in the map, they encounter operators whose other connections are unknown (but may be connected further).

If no set is available for the next node (a set that would be used in

reducing the possibilities across the operator), a new set is generated and placed into

the link connected to the node, as shown in the diagram:

If no set is available for the next node (a set that would be used in

reducing the possibilities across the operator), a new set is generated and placed into

the link connected to the node, as shown in the diagram:

The nodes with new information in their links are put on a queue. When they are encountered in the queue, any incoming sets of objects are anded, and the sets of objects are radiated from the node. Anding has to take into account what is consistent with what – a child is consistent with its parent (and replaces it). This process of set propagation continues until no further cutting is possible.

The possible outcomes of the process:

- A hard error occurred – either a matching failure (no ATTRIBUTES operator was found to match the map, or the object does not have the right parent) or an inconsistency was generated by transmission of states (he owns a car or a house - the failure on car meant he had to own a house). The meaning represented by the map is inconsistent with the structure produced by the text, so the meaning alternative can be discarded.

- Cutting did not complete, leaving sets of objects in the path. This indicates uncertainty about the match (and means we cannot convert the text into specific structure). The meaning remains as an alternative and may be cut later with better information.

- Cutting completed successfully, with no sets left in the path. The meaning matched the structure generated by the parser. At completion, one or more of the input objects may have been converted into one of its children, or a new object may have been created to replace one of the inputs (as an example, "3% of the amount" implies a new amount object).

The approach allows several adjacent maps to be combined when there is insufficient information in any one of the maps to complete cutting, or, in the extreme, a combined map of the whole sentence.

One difficulty is that there is only one map for each meaning, and there may be two prepositional phrases next to each other and requiring the same meaning to be tried in each simultaneously (an attribute of an attribute, say – this particular case would have resolved immediately).

Usually, in Constraint Reasoning, there is direct connection among the sources of alternatives. In some areas, particularly time management, this would be a clumsy representation, and a more fluid approach comes from using diffuse operators.

Meaning Level

Say we have the sentence

The owner sold the house described in the brochure.

We have to find out what "described" is - is it a verb phrase or a participial. We could assume that "house" before it and "in the brochure" after it guaranteed a passive use (the brochure describes the house), so it is a participial, but

The owner of the house described in the brochure how he came to build it.

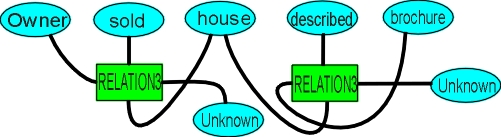

Here, "described", with the same local environment, becomes (after a bit of a double take) the verb, so we have to look further afield - we have to set up possibilities and eliminate them (the use of prepositional phrases in this way may seem clumsy, but the need to make multiple connections in legal and scientific text causes them to be poked in everywhere). We can visualise the setting up of alternatives as

If we use pattern 1, we have no link to "how", so it cannot be this pattern. We remove it, and only pattern 2 is left. This is a simple case, but the principle, of setting up connections and seeing which one is most effective, is general. For the first time, it brings in hypothesising, as asserting the existence of one pattern may force the nonexistence of another. By using chain grammar (jump connections block access by other connections), the disambiguation process using "generate and test" can rearrange the topology quite effectively. Sometimes there is no alternative but to build ambiguity in the final structure.

Conclusion

As one would expect, there is Constraint Reasoning occurring at many levels in the parsing and meaning resolution processes. The parsing process relies on putting in every possible alternative, then taking most of them out again by using reasoning from general cases implemented as structure templates, or by looking up special cases that are accessed based on word combinations. Some of the reasoning consists of adding alternatives based on the context - unusual for constraint reasoning.

The semantic process uses the structure supplied by parsing, and then develops within it another constraint reasoning problem, this time to do with the meanings supported by relations and prepositions. The different meanings are represented by maps, each of which need to be matched locally but also assembled into a larger map that represents the whole sentence.

The full range of Orion's Constraint Reasoning is involved - the dynamic construction of structure, the killing of values before overlaying them with other inconsistent ones, the hypothesising of values and structural backtracking. What is unique is the handling of objects and meanings as part of the constraint reasoning process.

To handle the full gamut of situations encountered in NLP, Orion's Constraint Reasoning has been extended to handle the dynamic addition of properties and structural rearrangement, as well as the dynamic pruning of properties.

Constraint Reasoning is also used in searching of text - see Searching Free Text. In this case, Constraint Reasoning has to expand its search, through synonyms and synonymical relations with different logical states and mapping of parameters, and resolution of anaphora.

See

Seeing Alternatives More Clearly